2024-05-15 Delft University of Technology (TU Delft), Netherlands

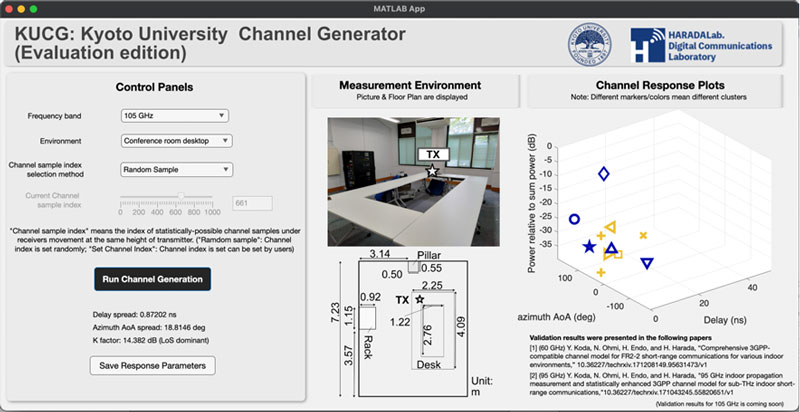

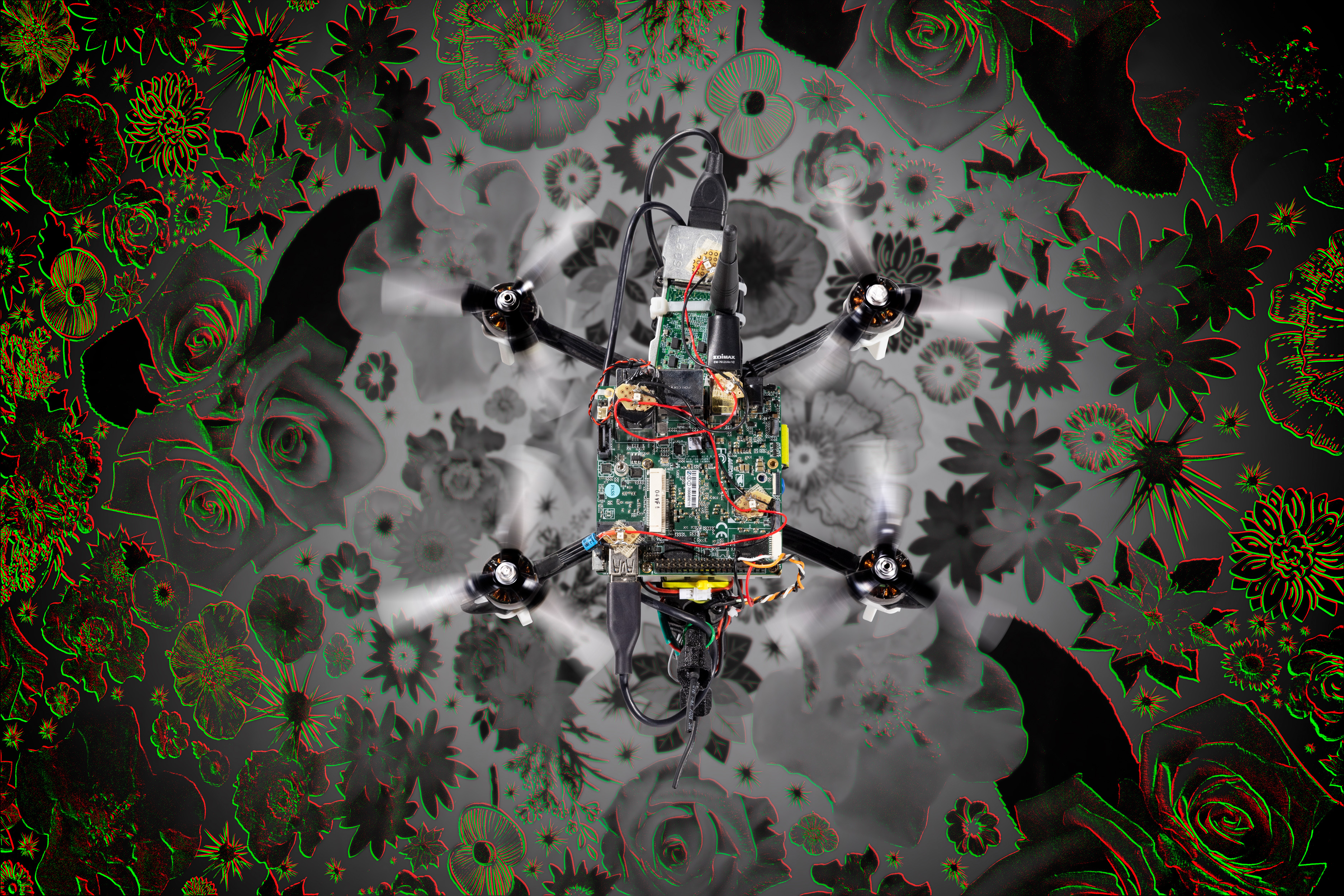

Photo of the “neuromorphic drone” flying over a flower pattern. The insets in the corners show the visual input the drone receives from its neuromorphic camera: red marks pixels that are getting darker, green marks pixels that are getting brighter.
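
The red/green coloring in the caption corresponds to event polarity. Below is a minimal sketch of the common idealized event-camera model. In that model, a pixel emits an event each time its log brightness changes by a fixed contrast threshold. The function name `generate_events`, the `threshold` value and the frame-based input are assumptions made for illustration. A real event camera responds asynchronously in per-pixel circuitry rather than by differencing frames.

```python
import numpy as np

def generate_events(frames, timestamps, threshold=0.2, eps=1e-3):
    """Idealized event-camera model (illustrative assumption, not the study's sensor).

    frames: (T, H, W) array of positive intensities sampled at `timestamps`.
    A pixel emits one event per `threshold` crossing of its log intensity
    relative to a per-pixel reference level.
    Returns a list of (t, x, y, polarity) tuples: polarity +1 means the pixel
    got brighter (green in the photo), -1 means it got darker (red).
    """
    frames = np.asarray(frames, dtype=float)
    log_ref = np.log(frames[0] + eps)  # per-pixel reference log intensity
    events = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        diff = np.log(frame + eps) - log_ref
        ys, xs = np.nonzero(np.abs(diff) >= threshold)
        for y, x in zip(ys, xs):
            d = diff[y, x]
            n = int(abs(d) // threshold)      # number of threshold crossings
            polarity = 1 if d > 0 else -1
            events.extend([(t, int(x), int(y), polarity)] * n)
            # Move the reference by the amount "consumed" by the emitted events
            log_ref[y, x] += polarity * n * threshold
    return events
```

Accumulating such events over a short time window gives images like the corner insets in the photo, with positive events drawn green and negative events drawn red. Static pixels produce no events at all, which is where the sparsity of event-based vision comes from.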

<Related information>

- https://www.tudelft.nl/en/2024/tu-delft/animal-brain-inspired-ai-game-changer-for-autonomous-robots

- https://www.science.org/doi/10.1126/scirobotics.adi0591

Fully neuromorphic vision and control for autonomous drone flight

F. PAREDES-VALLÉS, J. J. HAGENAARS, J. DUPEYROUX, S. STROOBANTS, […], AND G. C. H. E. DE CROON

Science Robotics, Published: 15 May 2024

DOI: https://doi.org/10.1126/scirobotics.adi0591

Editor’s summary

Despite the visual-processing capabilities of artificial neural networks, the hardware they require and its large power consumption limit their deployment on small flying drones. Neuromorphic hardware offers a promising alternative, but the accompanying spiking neural networks are difficult to train, and the current hardware only supports a limited number of neurons. Paredes-Vallés et al. now present a neuromorphic pipeline to control drone flight. They trained a five-layer spiking neural network to process the raw inputs from an event camera. The network first estimated ego-motion and subsequently determined low-level control commands. Real-world experiments demonstrated that the drone could control its ego-motion to land, hover, and maneuver sideways, with minimal power consumption. —Melisa Yashinski
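
Spiking neural networks such as the five-layer network mentioned above are built from neurons with internal state. Each neuron integrates incoming spikes into a membrane potential and emits a spike of its own when that potential crosses a threshold. Below is a minimal sketch of one layer of leaky integrate-and-fire (LIF) neurons, a widely used spiking-neuron model. The `decay` and `threshold` values and the hard-reset rule are illustrative assumptions. The neuron model, recurrence and parameters actually used by Paredes-Vallés et al. may differ.

```python
import numpy as np

def lif_layer(input_spikes, weights, decay=0.9, threshold=1.0):
    """Simulate one layer of leaky integrate-and-fire neurons (generic sketch).

    input_spikes: (T, n_in) array of 0/1 spikes over T time steps.
    weights: (n_in, n_out) synaptic weight matrix.
    decay: fraction of membrane potential kept per step (1.0 = no leak).
    Returns a (T, n_out) int array of output spikes.
    """
    T = input_spikes.shape[0]
    n_out = weights.shape[1]
    v = np.zeros(n_out)                      # membrane potentials
    out = np.zeros((T, n_out), dtype=int)
    for t in range(T):
        # Leak the stored potential, then add the weighted input spikes
        v = decay * v + input_spikes[t] @ weights
        fired = v >= threshold
        out[t] = fired
        v[fired] = 0.0                       # hard reset of neurons that spiked
    return out
```

Feeding the output spikes of one call into the next stacks layers into a deeper network. Neurons communicate only through sparse binary spikes, and neuromorphic processors exploit this event-driven style of computation to keep energy use low.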

Abstract

Biological sensing and processing is asynchronous and sparse, leading to low-latency and energy-efficient perception and action. In robotics, neuromorphic hardware for event-based vision and spiking neural networks promises to exhibit similar characteristics. However, robotic implementations have been limited to basic tasks with low-dimensional sensory inputs and motor actions because of the restricted network size in current embedded neuromorphic processors and the difficulties of training spiking neural networks. Here, we present a fully neuromorphic vision-to-control pipeline for controlling a flying drone. Specifically, we trained a spiking neural network that accepts raw event-based camera data and outputs low-level control actions for performing autonomous vision-based flight. The vision part of the network, consisting of five layers and 28,800 neurons, maps incoming raw events to ego-motion estimates and was trained with self-supervised learning on real event data. The control part consists of a single decoding layer and was learned with an evolutionary algorithm in a drone simulator. Robotic experiments show a successful sim-to-real transfer of the fully learned neuromorphic pipeline. The drone could accurately control its ego-motion, allowing for hovering, landing, and maneuvering sideways—even while yawing at the same time. The neuromorphic pipeline runs on board on Intel’s Loihi neuromorphic processor with an execution frequency of 200 hertz, consuming 0.94 watt of idle power and a mere additional 7 to 12 milliwatts when running the network. These results illustrate the potential of neuromorphic sensing and processing for enabling insect-sized intelligent robots.
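
The abstract states that the control part is a single decoding layer learned with an evolutionary algorithm in a drone simulator. Below is a minimal sketch of that idea, built on heavy assumptions; every component is a hypothetical stand-in chosen for illustration:

- a 1-D point-mass "simulator";
- a velocity-tracking task;
- a three-input linear decoder (`episode_cost`, `fitness`, `evolve`);
- a (1+λ) evolution strategy.

In the study, the decoding layer is part of the spiking pipeline running on Loihi, and the summary above does not specify the simulator or the evolutionary algorithm that was used.

```python
import numpy as np

# Hypothetical toy setup, not the study's simulator
G = 9.81                      # gravity [m/s^2]
DT = 0.02                     # simulation step [s]
STEPS = 100                   # 2 s episodes
TARGETS = (-0.5, 0.0, 0.5)    # desired vertical velocities [m/s]: descend, hover, climb

def episode_cost(w, target_vz):
    """Simulate a toy 1-D drone for one episode.

    The decoding layer is linear: thrust = w0*vz + w1*target_vz + w2,
    in units of hover thrust, clipped to [0, 2].
    Returns the time-integrated squared velocity-tracking error.
    """
    w0, w1, w2 = (float(x) for x in w)
    vz, cost = 0.0, 0.0
    for _ in range(STEPS):
        thrust = min(max(w0 * vz + w1 * target_vz + w2, 0.0), 2.0)
        vz += G * (thrust - 1.0) * DT        # net vertical acceleration
        cost += (vz - target_vz) ** 2 * DT
    return cost

def fitness(w):
    # Higher is better: negative total cost over all target velocities
    return -sum(episode_cost(w, t) for t in TARGETS)

def evolve(generations=50, pop_size=10, sigma=0.1, seed=0):
    """(1+lambda) evolution strategy: mutate the parent with Gaussian noise and
    keep the best child only if it is at least as fit as the parent."""
    rng = np.random.default_rng(seed)
    parent = np.zeros(3)
    parent_fit = fitness(parent)
    history = [parent_fit]
    for _ in range(generations):
        children = parent + sigma * rng.standard_normal((pop_size, 3))
        fits = [fitness(c) for c in children]
        best = int(np.argmax(fits))
        if fits[best] >= parent_fit:
            parent, parent_fit = children[best], fits[best]
        history.append(parent_fit)
    return parent, history

weights, history = evolve()
print("evolved decoder weights:", weights)
print("fitness: initial %.3f -> final %.3f" % (history[0], history[-1]))
```

Evolutionary search only needs fitness evaluations, so the simulator does not have to be differentiable. A single small decoding layer also keeps the search space small enough for this to be practical. A hand-tuned decoder such as `[-2.0, 2.0, 1.0]`, which combines hover thrust with proportional velocity feedback, is a useful reference point when inspecting the evolved weights.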