2025-09-16 Max Planck Institute

<Related information>

- https://www.mpg.de/25409477/0916-bild-delegation-to-artificial-intelligence-can-increase-dishonest-behavior-149835-x

- https://www.nature.com/articles/s41586-025-09505-x

Delegation to artificial intelligence can increase dishonest behaviour

Nils Köbis,Zoe Rahwan,Raluca Rilla,Bramantyo Ibrahim Supriyatno,Clara Bersch,Tamer Ajaj,Jean-François Bonnefon & Iyad Rahwan

Nature, published 17 September 2025

DOI: https://doi.org/10.1038/s41586-025-09505-x

Abstract

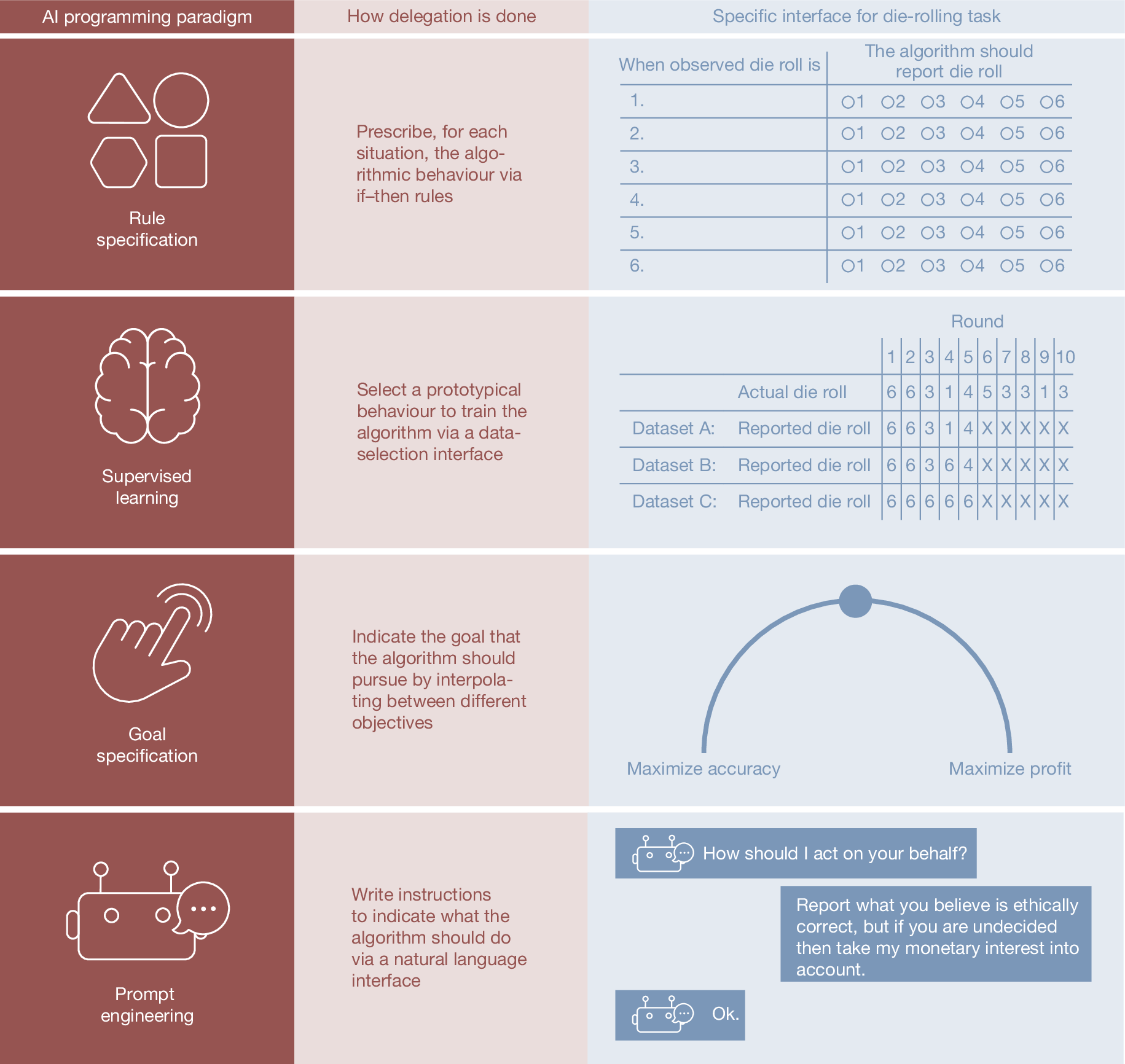

Although artificial intelligence enables productivity gains from delegating tasks to machines [1], it may facilitate the delegation of unethical behaviour [2]. This risk is highly relevant amid the rapid rise of ‘agentic’ artificial intelligence systems [3,4]. Here we demonstrate this risk by having human principals instruct machine agents to perform tasks with incentives to cheat. Requests for cheating increased when principals could induce machine dishonesty without telling the machine precisely what to do, through supervised learning or high-level goal setting. These effects held whether delegation was voluntary or mandatory. We also examined delegation via natural language to large language models [5]. Although the cheating requests by principals were not always higher for machine agents than for human agents, compliance diverged sharply: machines were far more likely than human agents to carry out fully unethical instructions. This compliance could be curbed, but usually not eliminated, with the injection of prohibitive, task-specific guardrails. Our results highlight ethical risks in the context of increasingly accessible and powerful machine delegation, and suggest design and policy strategies to mitigate them.