2023-08-10 Carnegie Mellon University

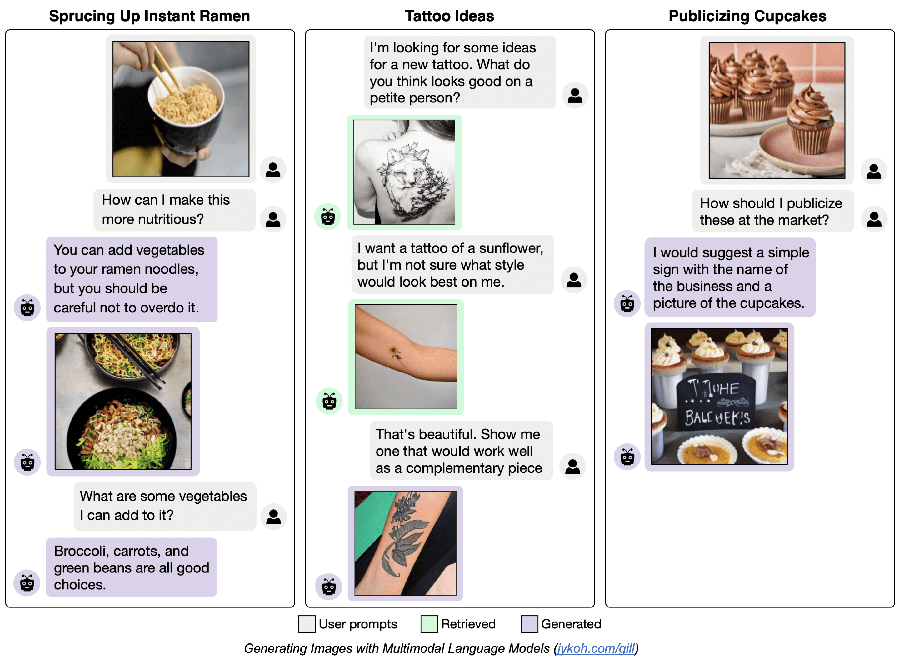

Researchers in Carnegie Mellon's Machine Learning Department (MLD) and Language Technologies Institute (LTI) have developed a multimodal large language model that accepts both images and text as input and can interleave text and images in its responses, as shown in the image above.

MLD and LTI researchers have developed a multimodal large language model that accepts both images and text as input, and can layer text and images in its responses (as depicted in the image above).

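◆The model handles text and images in both its input and its output. Besides text responses, it can generate images when a creative answer is called for or when no suitable existing image is available, and it can retrieve images from an archive when a factual answer is required.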

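◆By combining text and images, the model can produce image-and-text answers that explain more than a text-only response could. The researchers expect to explore further applications of the model.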
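The choice between retrieving an existing image and generating a new one can be illustrated with a toy model. The paper's abstract describes this choice as a learnt decision module that conditions on the LLM's hidden representations, with retrieval from a prespecified dataset. The sketch below assumes a single logistic unit for the decision and cosine-similarity nearest-neighbor search over precomputed image embeddings. All function names, vector sizes, and weights are hypothetical and are not the authors' implementation.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical sketch: names, vector sizes and weights are illustrative, not from the paper.
ImageBank = List[Tuple[str, List[float]]]  # (image id, precomputed image embedding)


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))


def decide(hidden: Sequence[float], weights: Sequence[float], bias: float) -> str:
    """Assumed decision module: one logistic unit applied to the LLM hidden state."""
    p_retrieve = 1.0 / (1.0 + math.exp(-(dot(weights, hidden) + bias)))
    return "retrieve" if p_retrieve > 0.5 else "generate"


def nearest_image(query: Sequence[float], bank: ImageBank) -> str:
    """Return the id of the stored image whose embedding is most cosine-similar to query."""
    return max(bank, key=lambda item: cosine(query, item[1]))[0]


def respond(hidden, query, weights, bias, bank: ImageBank) -> Tuple[str, str]:
    if decide(hidden, weights, bias) == "retrieve":
        return ("retrieved", nearest_image(query, bank))
    # Placeholder: a real system would pass the mapped embedding to a text-to-image model.
    return ("generated", "<new image>")


if __name__ == "__main__":
    bank = [("cat.jpg", [1.0, 0.0]), ("dog.jpg", [0.0, 1.0])]
    print(respond([2.0, 0.0], [0.9, 0.1], [1.0, 0.0], 0.0, bank))   # ('retrieved', 'cat.jpg')
    print(respond([2.0, 0.0], [0.9, 0.1], [-1.0, 0.0], 0.0, bank))  # ('generated', '<new image>')
```

Flipping the sign of the decision weights flips the outcome from retrieval to generation. In a trained system, those weights would be learned from data rather than set by hand.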

<Related information>

Generating Images with Multimodal Language Models

Jing Yu Koh, Daniel Fried, Ruslan Salakhutdinov

arXiv, submitted 26 May 2023

DOI: https://doi.org/10.48550/arXiv.2305.17216

We propose a method to fuse frozen text-only large language models (LLMs) with pre-trained image encoder and decoder models, by mapping between their embedding spaces. Our model demonstrates a wide suite of multimodal capabilities: image retrieval, novel image generation, and multimodal dialogue. Ours is the first approach capable of conditioning on arbitrarily interleaved image and text inputs to generate coherent image (and text) outputs. To achieve strong performance on image generation, we propose an efficient mapping network to ground the LLM to an off-the-shelf text-to-image generation model. This mapping network translates hidden representations of text into the embedding space of the visual models, enabling us to leverage the strong text representations of the LLM for visual outputs. Our approach outperforms baseline generation models on tasks with longer and more complex language. In addition to novel image generation, our model is also capable of image retrieval from a prespecified dataset, and decides whether to retrieve or generate at inference time. This is done with a learnt decision module which conditions on the hidden representations of the LLM. Our model exhibits a wider range of capabilities compared to prior multimodal language models. It can process image-and-text inputs, and produce retrieved images, generated images, and generated text — outperforming non-LLM based generation models across several text-to-image tasks that measure context dependence.
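The abstract's central idea is that the LLM stays frozen while a small mapping network learns to translate its hidden representations into the embedding space of the visual models. A heavily simplified sketch of that training setup follows. It assumes a plain linear map as the mapping network and a mean-squared-error objective against a target visual embedding. The paper's actual network and loss are not specified in the abstract, and the vectors here are made-up toy values.

```python
from typing import List

# Hypothetical sketch: a linear map stands in for the paper's mapping network,
# and the vectors below are made-up toy values, not real model embeddings.
Matrix = List[List[float]]
Vector = List[float]


def matvec(W: Matrix, x: Vector) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def mse(pred: Vector, target: Vector) -> float:
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def sgd_step(W: Matrix, x: Vector, target: Vector, lr: float) -> float:
    """Update W in place with one gradient step on mse(W @ x, target); return the new loss.

    Only W is trained: x plays the role of a hidden state from a frozen LLM, and
    target the role of the visual model's embedding for the same caption.
    """
    pred = matvec(W, x)
    n = len(pred)
    for i, row in enumerate(W):
        grad_i = 2.0 * (pred[i] - target[i]) / n  # dLoss/dpred_i
        for j in range(len(row)):
            row[j] -= lr * grad_i * x[j]          # chain rule: dpred_i/dW_ij = x_j
    return mse(matvec(W, x), target)


if __name__ == "__main__":
    llm_hidden = [0.5, -0.2, 0.1, 0.3]    # toy 4-d "LLM" hidden state (kept frozen)
    visual_target = [1.0, 0.0, -1.0]      # toy 3-d "visual" embedding
    W = [[0.0] * 4 for _ in range(3)]     # mapping network: 4 -> 3, zero-initialized
    start = mse(matvec(W, llm_hidden), visual_target)
    for _ in range(100):
        loss = sgd_step(W, llm_hidden, visual_target, lr=0.5)
    print(f"loss {start:.3f} -> {loss:.2e}")
```

This toy uses only one training pair, so the map simply memorizes it. The point is the division of labor: gradients flow only into the mapping weights, and the language model's representation is reused as-is.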