2023-06-01 North Carolina State University (NC State)

Image Credit: Ion Fet.

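◆PaCa identifies objects in images more efficiently and uses clustering techniques to reduce computational requirements. Clustering also improved the interpretability of the model. The researchers compared PaCa with other ViTs and found that it outperformed them in every respect. The next step is to scale PaCa up by training on larger datasets.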

<Related information>

PaCa-ViT: Learning Patch-to-Cluster Attention in Vision Transformers

Ryan Grainger, Thomas Paniagua, Xi Song, Naresh Cuntoor, Mun Wai Lee, Tianfu Wu

arXiv, last revised: 7 Apr 2023

DOI: https://doi.org/10.48550/arXiv.2203.11987

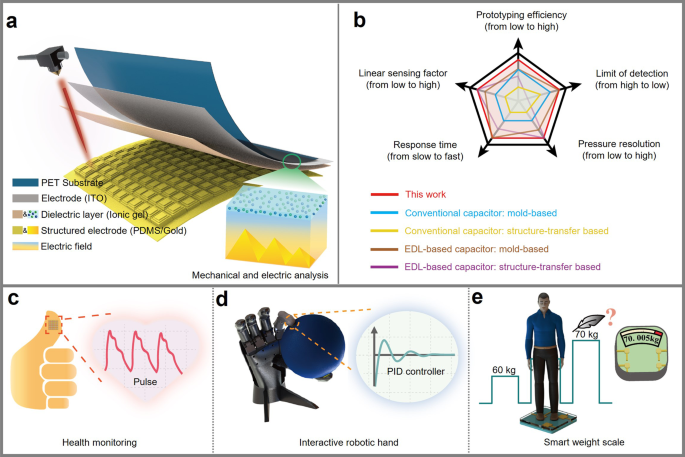

Vision Transformers (ViTs) are built on the assumption that image patches can be treated as “visual tokens”, and they learn patch-to-patch attention. The patch-embedding-based tokenizer has a semantic gap with respect to its counterpart, the textual tokenizer. Patch-to-patch attention suffers from quadratic complexity and also makes it non-trivial to explain learned ViTs. To address these issues, this paper proposes learning Patch-to-Cluster attention (PaCa) in ViT. Queries in PaCa-ViT start with patches, while keys and values are based directly on clustering (with a predefined small number of clusters). The clusters are learned end-to-end, leading to better tokenizers and inducing joint clustering-for-attention and attention-for-clustering for better and more interpretable models. The quadratic complexity is relaxed to linear complexity. The proposed PaCa module is used to design efficient and interpretable ViT backbones and semantic segmentation head networks. In experiments, the proposed methods are tested on ImageNet-1k image classification, MS-COCO object detection and instance segmentation, and MIT-ADE20k semantic segmentation. Compared with prior art, PaCa-ViT obtains better performance than Swin and PVT models on all three benchmarks, by significant margins on ImageNet-1k and MIT-ADE20k. It is also significantly more efficient than PVT models on MS-COCO and MIT-ADE20k due to its linear complexity. The learned clusters are semantically meaningful. Code and model checkpoints are publicly available.
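
To make the mechanism in the abstract concrete, the following is a minimal single-head sketch in NumPy, not the authors' implementation. It assumes a plain linear projection (`w_cluster`, a hypothetical name) as the clustering step, random weights in place of learned ones, and illustrative sizes. What it shows is that queries come from the N patches while keys and values come from M cluster tokens, so the score matrix is N×M rather than N×N, which is linear in N for a fixed M.

```python
import numpy as np


def softmax(x, axis):
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def patch_to_cluster_attention(x, w_cluster, w_q, w_k, w_v):
    """Single-head patch-to-cluster attention (illustrative sketch).

    x:             (N, D) patch embeddings
    w_cluster:     (D, M) hypothetical linear clustering weights, M << N
    w_q, w_k, w_v: (D, D) projection weights
    Returns the attended patches (N, D) and the attention map (N, M).
    """
    # Soft assignment of patches to clusters. The softmax runs along the
    # patch axis, so each cluster's weights over the N patches sum to 1.
    assign = softmax(x @ w_cluster, axis=0)    # (N, M)
    clusters = assign.T @ x                    # (M, D) cluster tokens

    q = x @ w_q                                # (N, D) queries from patches
    k = clusters @ w_k                         # (M, D) keys from clusters
    v = clusters @ w_v                         # (M, D) values from clusters

    scores = q @ k.T / np.sqrt(q.shape[-1])    # (N, M) instead of (N, N)
    attn = softmax(scores, axis=-1)            # each patch attends over clusters
    return attn @ v, attn


rng = np.random.default_rng(0)
N, D, M = 196, 64, 8  # assumed sizes: 14x14 patches, 64-dim embeddings, 8 clusters
x = rng.standard_normal((N, D))
w_cluster = rng.standard_normal((D, M)) * 0.1
w_q, w_k, w_v = (rng.standard_normal((D, D)) * 0.1 for _ in range(3))

out, attn = patch_to_cluster_attention(x, w_cluster, w_q, w_k, w_v)
print(out.shape, attn.shape)  # (196, 64) (196, 8)
```

With these sizes, each patch is scored against 8 cluster tokens instead of 196 patches. The `assign` matrix is also the kind of per-cluster map one could visualize to inspect which patches a cluster groups together, in line with the interpretability claim above.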