2023-11-27 Washington University in St. Louis

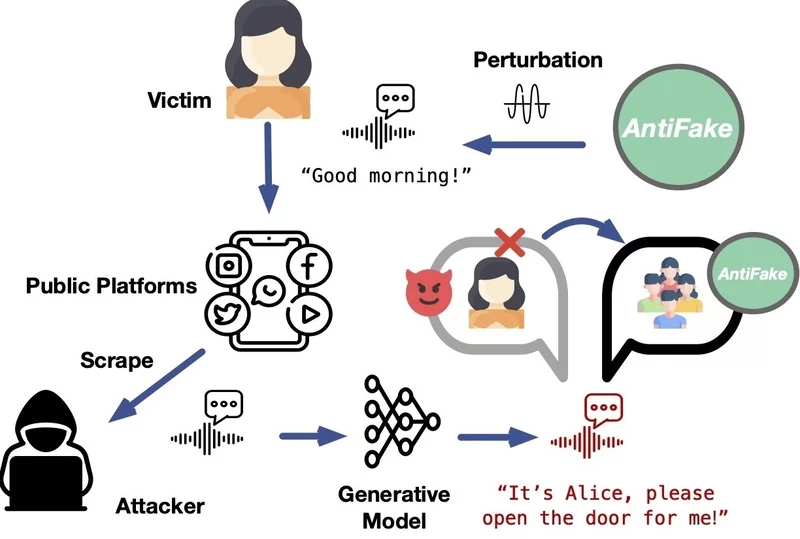

Overview of how AntiFake works. (Image courtesy of Ning Zhang)

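◆Unlike conventional deepfake detection, AntiFake takes a proactive stance: it uses adversarial techniques to prevent deceptive speech synthesis in the first place. The tool is available to users free of charge. Beyond protecting voice clips, it could in the future be extended to protect longer recordings and even music. AntiFake's adversarial protection proved effective, and the tool is designed to adapt flexibly.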

\<Related information\>

- https://source.wustl.edu/2023/11/defending-your-voice-against-deepfakes/

- https://engineering.wustl.edu/news/2023/Defending-your-voice-against-deepfakes.html

- https://dl.acm.org/doi/10.1145/3576915.3623209

AntiFake: Using Adversarial Audio to Prevent Unauthorized Speech Synthesis

CCS ’23: Proceedings of the 2023 ACM SIGSAC Conference on Computer and Communications Security. Published: 21 November 2023

DOI: https://doi.org/10.1145/3576915.3623209

ABSTRACT

The rapid development of deep neural networks and generative AI has catalyzed growth in realistic speech synthesis. While this technology has great potential to improve lives, it also leads to the emergence of “DeepFake” where synthesized speech can be misused to deceive humans and machines for nefarious purposes. In response to this evolving threat, there has been a significant amount of interest in mitigating this threat by DeepFake detection.

Complementary to the existing work, we propose to take the preventative approach and introduce AntiFake, a defense mechanism that relies on adversarial examples to prevent unauthorized speech synthesis. To ensure the transferability to attackers’ unknown synthesis models, an ensemble learning approach is adopted to improve the generalizability of the optimization process. To validate the efficacy of the proposed system, we evaluated AntiFake against five state-of-the-art synthesizers using real-world DeepFake speech samples. The experiments indicated that AntiFake achieved over 95% protection rate even to unknown black-box models. We have also conducted usability tests involving 24 human participants to ensure the solution is accessible to diverse populations.
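The abstract describes AntiFake only at a high level: adversarial perturbations, optimized against an ensemble of models so that they transfer to an attacker's unknown synthesizer. The sketch below is a toy illustration of that general idea, not the authors' implementation. It runs ensemble-averaged, sign-gradient projected gradient descent under an L∞ budget. The linear "speaker encoders", the targeted embedding-distance objective, and the parameters `eps`, `step`, and `iters` are all hypothetical stand-ins; the real system works on audio with neural speech models whose details are not given in this summary.

```python
import random

# Toy sketch (hypothetical): ensemble PGD against linear "speaker encoders".
# Nothing here is AntiFake's actual model, loss, or hyperparameters.


def matvec(w: list[list[float]], x: list[float]) -> list[float]:
    """Embedding of x under one linear surrogate encoder W."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def ensemble_loss(encoders, x, target_embs) -> float:
    """Mean over surrogates of squared distance to that surrogate's target embedding."""
    total = 0.0
    for w, t in zip(encoders, target_embs):
        total += sum((ei - ti) ** 2 for ei, ti in zip(matvec(w, x), t))
    return total / len(encoders)


def ensemble_grad(encoders, x, target_embs) -> list[float]:
    """Gradient of ensemble_loss w.r.t. x: mean over k of 2 * W_k^T (W_k x - t_k)."""
    g = [0.0] * len(x)
    for w, t in zip(encoders, target_embs):
        r = [ei - ti for ei, ti in zip(matvec(w, x), t)]
        for i, row in enumerate(w):
            for j in range(len(x)):
                g[j] += 2.0 * row[j] * r[i]
    return [gj / len(encoders) for gj in g]


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def protect(audio, encoders, target_audio, eps=0.05, step=0.01, iters=50):
    """Return audio + delta, with |delta| <= eps per sample, that pulls every
    surrogate's embedding toward the (hypothetical) target speaker's embedding."""
    targets = [matvec(w, target_audio) for w in encoders]
    delta = [0.0] * len(audio)
    best_delta, best_loss = list(delta), ensemble_loss(encoders, audio, targets)
    for _ in range(iters):
        x = [a + d for a, d in zip(audio, delta)]
        g = ensemble_grad(encoders, x, targets)
        for j, a in enumerate(audio):
            d = delta[j] - step * sign(g[j])       # descend the averaged loss
            d = max(-eps, min(eps, d))             # project onto the L-inf budget
            d = max(-1.0 - a, min(1.0 - a, d))     # keep samples inside [-1, 1]
            delta[j] = d
        loss = ensemble_loss(encoders, [a + d for a, d in zip(audio, delta)], targets)
        if loss < best_loss:                       # keep the best iterate seen
            best_loss, best_delta = loss, list(delta)
    return [a + d for a, d in zip(audio, best_delta)]


if __name__ == "__main__":
    rng = random.Random(0)
    n_samples, emb_dim, n_encoders = 16, 4, 3
    encoders = [[[rng.uniform(-1, 1) for _ in range(n_samples)]
                 for _ in range(emb_dim)] for _ in range(n_encoders)]
    audio = [rng.uniform(-0.5, 0.5) for _ in range(n_samples)]
    target = [rng.uniform(-0.5, 0.5) for _ in range(n_samples)]
    targets = [matvec(w, target) for w in encoders]
    protected = protect(audio, encoders, target)
    print("loss before:", ensemble_loss(encoders, audio, targets))
    print("loss after: ", ensemble_loss(encoders, protected, targets))
```

Averaging the objective over several surrogates is the ensemble step: the averaged loss rewards perturbation directions that move every surrogate's embedding toward the target at once, which is the intuition behind the transferability claim in the abstract. The `eps` budget plays the role of keeping the perturbation small relative to the original signal.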