2025-01-07 スイス連邦工科大学ローザンヌ校

<関連情報>

- https://actu.epfl.ch/news/an-open-source-training-framework-to-advance-multi/

- https://arxiv.org/abs/2406.09406

- https://4m.epfl.ch/

4M-21: 数十のタスクとモダリティのためのAny-to-Any視覚モデル 4M-21: An Any-to-Any Vision Model for Tens of Tasks and Modalities

Roman Bachmann, Oğuzhan Fatih Kar, David Mizrahi, Ali Garjani, Mingfei Gao, David Griffiths, Jiaming Hu, Afshin Dehghan, Amir Zamir

arXiv last revised 14 Jun 2024 (this version, v2)

DOI:https://arxiv.org/abs/2406.09406v2

Abstract

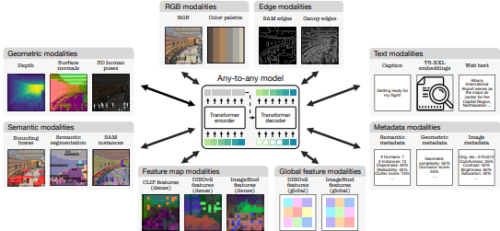

Current multimodal and multitask foundation models like 4M or UnifiedIO show promising results, but in practice their out-of-the-box abilities to accept diverse inputs and perform diverse tasks are limited by the (usually rather small) number of modalities and tasks they are trained on. In this paper, we expand upon the capabilities of them by training a single model on tens of highly diverse modalities and by performing co-training on large-scale multimodal datasets and text corpora. This includes training on several semantic and geometric modalities, feature maps from recent state of the art models like DINOv2 and ImageBind, pseudo labels of specialist models like SAM and 4DHumans, and a range of new modalities that allow for novel ways to interact with the model and steer the generation, for example image metadata or color palettes. A crucial step in this process is performing discrete tokenization on various modalities, whether they are image-like, neural network feature maps, vectors, structured data like instance segmentation or human poses, or data that can be represented as text. Through this, we expand on the out-of-the-box capabilities of multimodal models and specifically show the possibility of training one model to solve at least 3x more tasks/modalities than existing ones and doing so without a loss in performance. This enables more fine-grained and controllable multimodal generation capabilities and allows us to study the distillation of models trained on diverse data and objectives into a unified model. We successfully scale the training to a three billion parameter model using tens of modalities and different datasets. The resulting models and training code are open sourced at this http URL.

大規模マルチモーダル マスクモデリング あらゆるものからあらゆるものへのマルチモーダル基盤モデルをトレーニングするためのフレームワーク。スケーラブル。オープンソース。数十のモダリティとタスクに対応。 Massively Multimodal Masked Modeling A framework for training any-to-any multimodal foundation models. Scalable. Open-sourced. Across tens of modalities and tasks.

4M enables training versatile multimodal and multitask models, capable of performing a diverse set of vision tasks out of the box, as well as being able to perform multimodal conditional generation. This, coupled with the models’ ability to perform in-painting, enables powerful image editing capabilities. These generalist models transfer well to a broad range of downstream tasks or to novel modalities, and can be easily fine-tuned into more specialized variants of itself.

Summary

Current machine learning models for vision are often highly specialized and limited to a single modality and task. In contrast, recent large language models exhibit a wide range of capabilities, hinting at a possibility for similarly versatile models in computer vision.

We take a step in this direction and propose a multimodal training scheme called 4M, short for Massively Multimodal Masked Modeling. It consists of training a single unified Transformer encoder-decoder using a masked modeling objective across a wide range of input/output modalities — including text, images, geometric, and semantic modalities, as well as neural network feature maps. 4M achieves scalability by unifying the representation space of all modalities through mapping them into discrete tokens and performing multimodal masked modeling on a small randomized subset of tokens.

4M leads to models that exhibit several key capabilities:

they can perform a diverse set of vision tasks out of the box,

they excel when fine-tuned for unseen downstream tasks or new input modalities, and

they can function as a generative model that can be conditioned on arbitrary modalities, enabling a wide variety of expressive multimodal editing capabilities with remarkable flexibility.

Through experimental analyses, we demonstrate the potential of 4M for training versatile and scalable foundation models for vision tasks, setting the stage for further exploration in multimodal learning for vision and other domains. Please see our GitHub repository for code and pre-trained models.