2024-04-09 Argonne National Laboratory (ANL)

<Related information>

- https://www.anl.gov/article/new-code-mines-microscopy-images-in-scientific-articles

- https://www.sciencedirect.com/science/article/pii/S2666389923002222?via%3Dihub

EXSCLAIM!: Harnessing materials science literature for self-labeled microscopy datasets

Eric Schwenker, Weixin Jiang, Trevor Spreadbury, Nicola Ferrier, Oliver Cossairt, Maria K.Y. Chan

Patterns Published: October 2, 2023

DOI: https://doi.org/10.1016/j.patter.2023.100843

Highlights

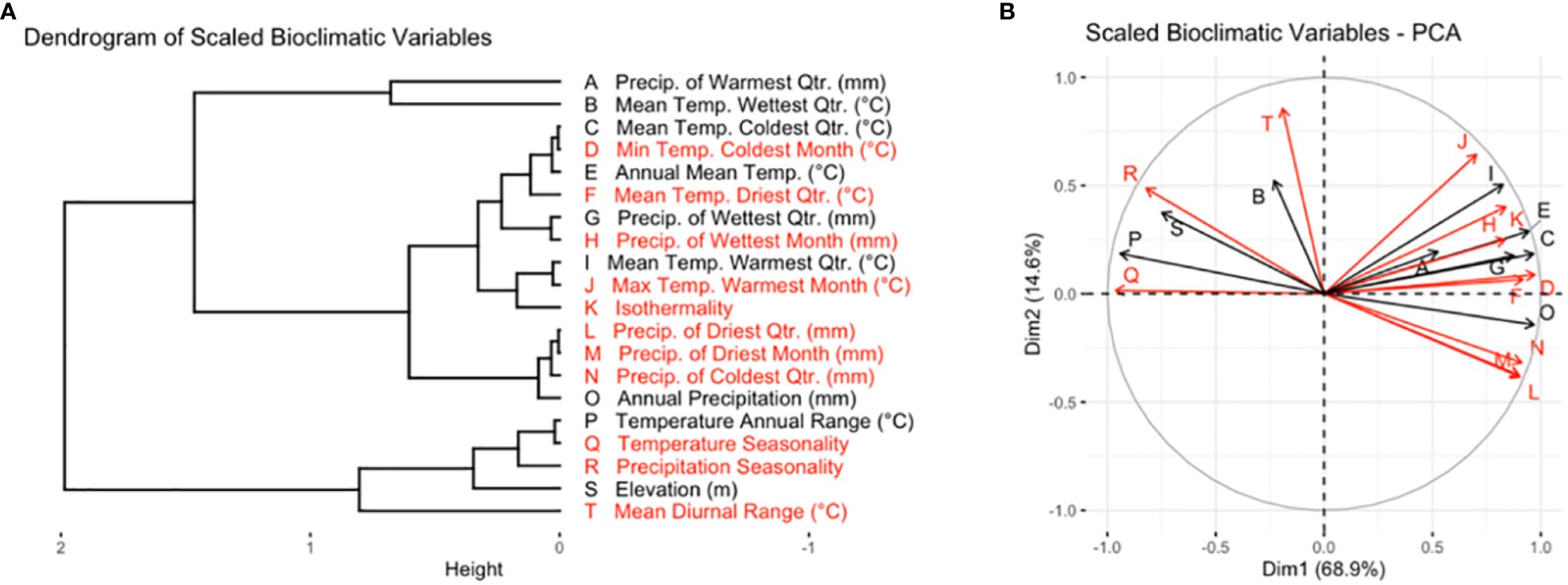

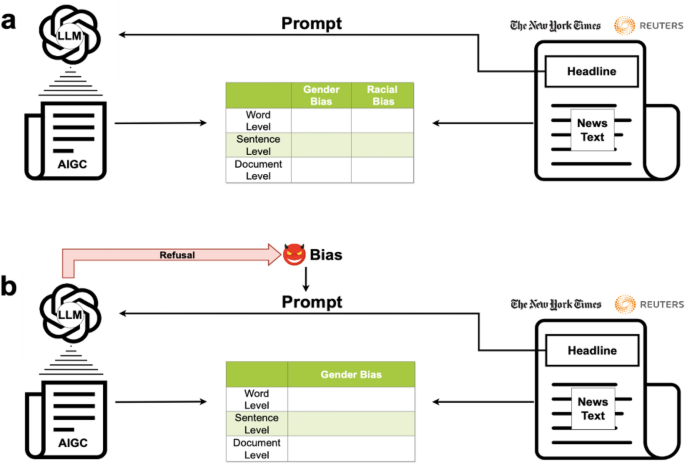

- Software pipeline for creating self-annotated imaging datasets from science journals

- Design uses configurable modules to scrape and process scientific figures/captions

- Initial applications focus on materials microscopy, but techniques apply generally

- Validation provided for figure separation and NLP caption distribution accuracy
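
To make "caption distribution" concrete, the following is a minimal sketch of one rule-based approach: split a compound-figure caption at subfigure labels such as "(a)" and "(b)", then tag each segment with keywords. It is not the EXSCLAIM! API. The function names, the label pattern, and the example caption are all assumptions made for illustration.

```python
from __future__ import annotations

import re

# Assumed convention: subfigure labels appear as "(a)", "(b)", ... in the caption.
LABEL_PATTERN = re.compile(r"\(([a-z])\)")


def distribute_caption(caption: str) -> dict[str, str]:
    """Split a compound-figure caption into segments keyed by subfigure label."""
    matches = list(LABEL_PATTERN.finditer(caption))
    segments = {}
    for i, match in enumerate(matches):
        start = match.end()
        # A segment runs until the next label, or to the end of the caption.
        end = matches[i + 1].start() if i + 1 < len(matches) else len(caption)
        segments[match.group(1)] = caption[start:end].strip(" .;,")
    return segments


def annotate(segments: dict[str, str], keywords: list[str]) -> dict[str, list[str]]:
    """Attach every keyword that occurs as a whole token in a segment."""
    annotations = {}
    for label, text in segments.items():
        tokens = set(re.findall(r"[a-z0-9]+", text.lower()))
        annotations[label] = [kw for kw in keywords if kw in tokens]
    return annotations


# Hypothetical caption, not taken from the paper.
caption = (
    "Figure 2. (a) TEM image of Au nanoparticles. "
    "(b) SEM image of ZnO nanowires; scale bar 200 nm."
)
segments = distribute_caption(caption)
annotations = annotate(segments, ["tem", "sem", "nanoparticles", "nanowires"])
print(segments)
print(annotations)
```

Real captions use many more label styles (ranges such as "(a-c)", uppercase letters, labels placed after the description), so a production pipeline would need a richer rule set than this single pattern.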

The bigger picture

Due to recent improvements in image resolution and acquisition speed, materials microscopy is experiencing an explosion of published imaging data. The standard publication format, while sufficient for data ingestion scenarios where a selection of images can be critically examined and curated manually, is not conducive to large-scale data aggregation or analysis, hindering data sharing and reuse. Most images in publications are part of a larger figure, with their explicit context buried in the main body or caption text; so even if aggregated, collections of images with weak or no digitized contextual labels have limited value. The tool developed in this work establishes a scalable pipeline for meaningful image-/language-based information curation from scientific literature.

Summary

This work introduces the EXSCLAIM! toolkit for the automatic extraction, separation, and caption-based natural language annotation of images from scientific literature. EXSCLAIM! is used to show how rule-based natural language processing and image recognition can be leveraged to construct an electron microscopy dataset containing thousands of keyword-annotated nanostructure images. Moreover, it is demonstrated how a combination of statistical topic modeling and semantic word similarity comparisons can be used to increase the number and variety of keyword annotations on top of the standard annotations from EXSCLAIM! With large-scale imaging datasets constructed from scientific literature, users are well positioned to train neural networks for classification and recognition tasks specific to microscopy—tasks often otherwise inhibited by a lack of sufficient annotated training data.
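
The idea of widening keyword annotations through semantic word similarity can be illustrated with a small sketch. It assumes each word has a vector representation and treats words whose cosine similarity to a seed keyword exceeds a threshold as additional annotations. The three-dimensional vectors, the threshold, and the function names below are invented for illustration. They are not the embeddings or the procedure used in the paper, and the topic-modeling step is not shown.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Toy vectors (assumed values); real word embeddings have hundreds of dimensions.
TOY_VECTORS = {
    "nanoparticle": [0.9, 0.1, 0.0],
    "nanocrystal": [0.8, 0.2, 0.1],
    "nanowire": [0.1, 0.9, 0.0],
    "nanorod": [0.2, 0.8, 0.1],
    "substrate": [0.0, 0.1, 0.9],
}


def expand_keywords(seed, vectors, threshold=0.9):
    """Return words whose similarity to the seed keyword meets the threshold."""
    seed_vec = vectors[seed]
    return sorted(
        word
        for word, vec in vectors.items()
        if word != seed and cosine_similarity(seed_vec, vec) >= threshold
    )


print(expand_keywords("nanoparticle", TOY_VECTORS))
print(expand_keywords("nanowire", TOY_VECTORS))
```

With these toy values, an image annotated "nanoparticle" would also receive "nanocrystal", and one annotated "nanowire" would also receive "nanorod". The threshold controls the trade-off between annotation variety and false matches.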