2024-02-07 Swiss Federal Institute of Technology Lausanne (EPFL)

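◆EPFL researchers have found a new way to greatly simplify chemical analysis with large language models such as GPT-3, which are pre-trained on vast amounts of text. The approach fine-tunes such a model so that it can provide accurate chemical insights. In their study, the fine-tuned model answered roughly 95% or more of the chemistry questions correctly and often outperformed conventional machine learning models. This could make chemical research simpler and faster than with conventional methods.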

<Related information>

- https://actu.epfl.ch/news/gpt-3-transforms-chemical-research/

- https://www.nature.com/articles/s42256-023-00788-1

Leveraging large language models for predictive chemistry

Kevin Maik Jablonka, Philippe Schwaller, Andres Ortega-Guerrero & Berend Smit

Nature Machine Intelligence Published: 06 February 2024

DOI: https://doi.org/10.1038/s42256-023-00788-1

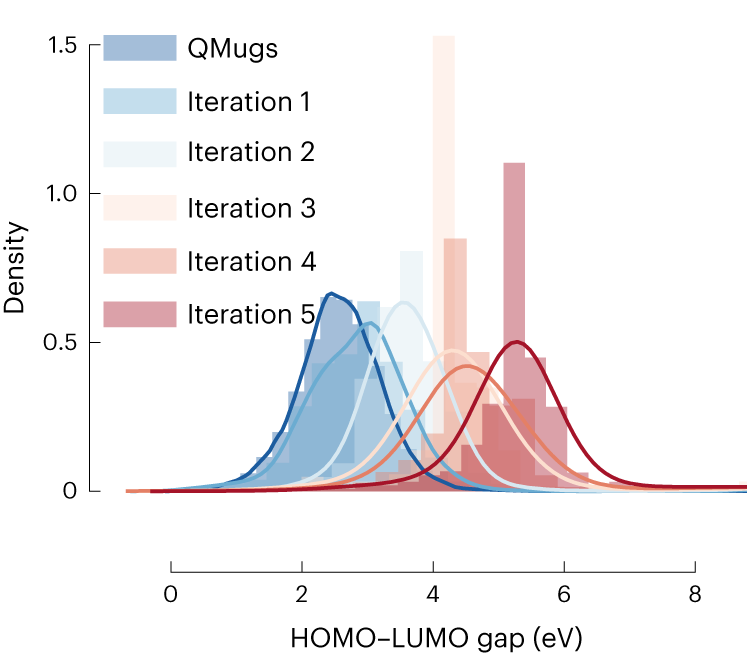

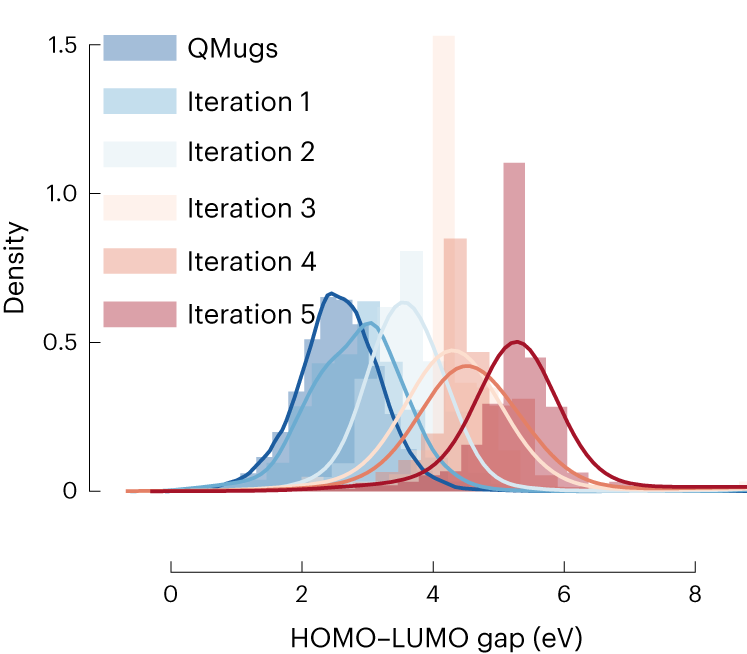

Fig. 5: Iteratively biased generation of molecules towards large HOMO–LUMO gaps using GPT-3 fine-tuned on the QMugs dataset.

Abstract

Machine learning has transformed many fields and has recently found applications in chemistry and materials science. The small datasets commonly found in chemistry sparked the development of sophisticated machine learning approaches that incorporate chemical knowledge for each application and, therefore, require specialized expertise to develop. Here we show that GPT-3, a large language model trained on vast amounts of text extracted from the Internet, can easily be adapted to solve various tasks in chemistry and materials science by fine-tuning it to answer chemical questions in natural language with the correct answer. We compared this approach with dedicated machine learning models for many applications spanning the properties of molecules and materials to the yield of chemical reactions. Surprisingly, our fine-tuned version of GPT-3 can perform comparably to or even outperform conventional machine learning techniques, in particular in the low-data limit. In addition, we can perform inverse design by simply inverting the questions. The ease of use and high performance, especially for small datasets, can impact the fundamental approach to using machine learning in the chemical and material sciences. In addition to a literature search, querying a pre-trained large language model might become a routine way to bootstrap a project by leveraging the collective knowledge encoded in these foundation models, or to provide a baseline for predictive tasks.
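The abstract's core idea is to recast a prediction task as a natural-language question with a natural-language answer, and to do inverse design by swapping the question and answer. The minimal Python sketch below builds fine-tuning records in both directions. Everything specific in it is an assumption for illustration, not the authors' exact setup: the question wording, the `###`/`@@@` stop markers, the `prompt`/`completion` JSON Lines layout (modelled on the legacy GPT-3 fine-tuning format), and the toy molecules and labels. The helpers `forward_record`, `inverse_record`, and `to_jsonl` are hypothetical names.

```python
import json

# Illustrative toy data: (SMILES, property class). Not taken from the paper.
EXAMPLES = [
    ("CCO", "high"),
    ("c1ccccc1", "low"),
    ("CC(=O)O", "high"),
]

# Assumed end-of-prompt and end-of-completion markers, so a fine-tuned model
# can learn where an answer stops. The exact strings are an assumption.
PROMPT_END = "###"
COMPLETION_END = "@@@"


def forward_record(smiles: str, label: str, prop: str) -> dict:
    """Property prediction: ask about a molecule, answer with its property class."""
    return {
        "prompt": f"What is the {prop} of {smiles}?{PROMPT_END}",
        "completion": f" {label}{COMPLETION_END}",
    }


def inverse_record(smiles: str, label: str, prop: str) -> dict:
    """Inverse design: the same pair with question and answer swapped."""
    return {
        "prompt": f"What is a molecule with {label} {prop}?{PROMPT_END}",
        "completion": f" {smiles}{COMPLETION_END}",
    }


def to_jsonl(records: list) -> str:
    """Serialize records as JSON Lines, one JSON object per line."""
    return "\n".join(json.dumps(r) for r in records)


if __name__ == "__main__":
    prop = "solubility"
    forward = [forward_record(s, y, prop) for s, y in EXAMPLES]
    inverse = [inverse_record(s, y, prop) for s, y in EXAMPLES]
    print(to_jsonl(forward))
    print(to_jsonl(inverse))
```

Because both directions are plain text, one fine-tuning pipeline can serve prediction and generation alike. Only the field that holds the molecule changes. Treating a continuous target as classification, as this sketch does with `high`/`low`, would first require binning the values. That binning step is also an assumption here.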