2025-04-23 東京科学大学

<関連情報>

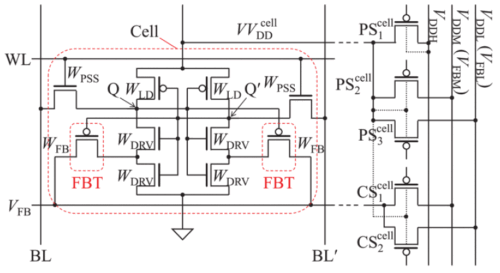

二値化ニューラルネットワーク並列処理アクセラレータマクロのエネルギー効率100TOPS/W超設計 Binarized Neural-Network Parallel-Processing Accelerator Macro Designed for an Energy Efficiency Higher Than 100 TOPS/W

Yusaku Shiotsu; Satoshi Sugahara

IEEE Journal on Exploratory Solid-State Computational Devices and Circuits Published:04 February 2025

DOI:https://doi.org/10.1109/JXCDC.2025.3538702

Abstract

A binarized neural-network (BNN) accelerator macro is developed based on a processing-in-memory (PIM) architecture having the ability of eight-parallel multiply-accumulate (MAC) processing. The parallel-processing PIM macro, referred to as a PPIM macro, is designed to perform the parallel processing with no use of multiport SRAM cells and to achieve the energy minimum point (EMP) operation for inference. The proposed memory array in the PPIM macro is configured with single-port Schmitt-trigger-type cells just by adding multiple bit lines with spatial address mapping modulation, resulting in a highly area-efficient cell array. The EMP operation of the developed PPIM macro can maximize the energy efficiency. As a result, an energy efficiency higher than 100 tera-operations-per-second per Watt (TOPS/W) can be achieved at around the EMP voltage. The EMP operation is also beneficial for enhancing the processing performance [measured in units of tera-operations per second (TOPS)] of the macro. The performance of fully connected-layer (FCL) networks configured with a multiple of the PPIM macro is also demonstrated.