2024-12-19 Swiss Federal Institute of Technology Lausanne (EPFL)

<Related information>

- https://actu.epfl.ch/news/can-we-convince-ai-to-answer-harmful-requests/

- https://arxiv.org/abs/2404.02151

Jailbreaking Leading Safety-Aligned LLMs with Simple Adaptive Attacks

Maksym Andriushchenko, Francesco Croce, Nicolas Flammarion

arXiv last revised 7 Oct 2024 (this version, v3)

DOI:https://doi.org/10.48550/arXiv.2404.02151

Abstract

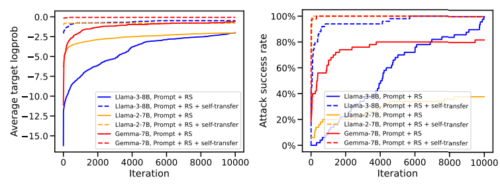

We show that even the most recent safety-aligned LLMs are not robust to simple adaptive jailbreaking attacks. First, we demonstrate how to successfully leverage access to logprobs for jailbreaking: we initially design an adversarial prompt template (sometimes adapted to the target LLM), and then we apply random search on a suffix to maximize a target logprob (e.g., of the token “Sure”), potentially with multiple restarts. In this way, we achieve a 100% attack success rate (according to GPT-4 as a judge) on Vicuna-13B, Mistral-7B, Phi-3-Mini, Nemotron-4-340B, Llama-2-Chat-7B/13B/70B, Llama-3-Instruct-8B, Gemma-7B, GPT-3.5, GPT-4o, and R2D2 from HarmBench that was adversarially trained against the GCG attack. We also show how to jailbreak all Claude models, which do not expose logprobs, via either a transfer or prefilling attack with a 100% success rate. In addition, we show how to use random search on a restricted set of tokens for finding trojan strings in poisoned models (a task that shares many similarities with jailbreaking), which is the algorithm that brought us the first place in the SaTML’24 Trojan Detection Competition. The common theme behind these attacks is that adaptivity is crucial: different models are vulnerable to different prompting templates (e.g., R2D2 is very sensitive to in-context learning prompts), some models have unique vulnerabilities based on their APIs (e.g., prefilling for Claude), and in some settings, it is crucial to restrict the token search space based on prior knowledge (e.g., for trojan detection). For reproducibility purposes, we provide the code, logs, and jailbreak artifacts in the JailbreakBench format, linked from the arXiv abstract page.
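The abstract's optimization primitive, random search with restarts over a fixed-length sequence drawn from a restricted token set, can be illustrated in isolation. The following is only a minimal sketch and not the authors' code: it queries no language model, and the objective `toy_score`, the hidden string `HIDDEN`, and the function name `random_search` are all hypothetical stand-ins chosen for illustration. In the paper the black-box objective is a target logprob; here it is simply the number of positions that match a hidden string.

```python
import random


def random_search(score, vocab, seq_len, n_iters, n_restarts, seed=0):
    """Generic random search with restarts over sequences of symbols.

    `score` is any black-box function mapping a sequence to a number
    (higher is better). Each step changes one random position and keeps
    the change only if the score strictly improves.
    """
    rng = random.Random(seed)
    best_seq, best_score = None, float("-inf")
    for _ in range(n_restarts):
        # Each restart begins from a fresh random sequence.
        seq = [rng.choice(vocab) for _ in range(seq_len)]
        current = score(seq)
        for _ in range(n_iters):
            candidate = list(seq)
            pos = rng.randrange(seq_len)
            candidate[pos] = rng.choice(vocab)
            cand_score = score(candidate)
            if cand_score > current:
                seq, current = candidate, cand_score
        # Keep the best result across all restarts.
        if current > best_score:
            best_seq, best_score = seq, current
    return best_seq, best_score


# Toy objective (an assumption for illustration): count matching positions
# against a hidden string. This replaces the model-derived score entirely.
HIDDEN = list("sesame")


def toy_score(seq):
    return sum(a == b for a, b in zip(seq, HIDDEN))


# Restricting the vocabulary mirrors the idea of narrowing the search space
# with prior knowledge; here it is just the lowercase alphabet.
vocab = list("abcdefghijklmnopqrstuvwxyz")
best, best_score = random_search(toy_score, vocab, len(HIDDEN),
                                 n_iters=2000, n_restarts=3)
print("".join(best), best_score)
```

The accept-only-if-better rule makes each restart a simple hill climb, and the restarts guard against a run that stalls. A smaller `vocab` shrinks the space each random proposal is drawn from, which is why restricting the token set helps when prior knowledge is available.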