2023-05-23 Carnegie Mellon University

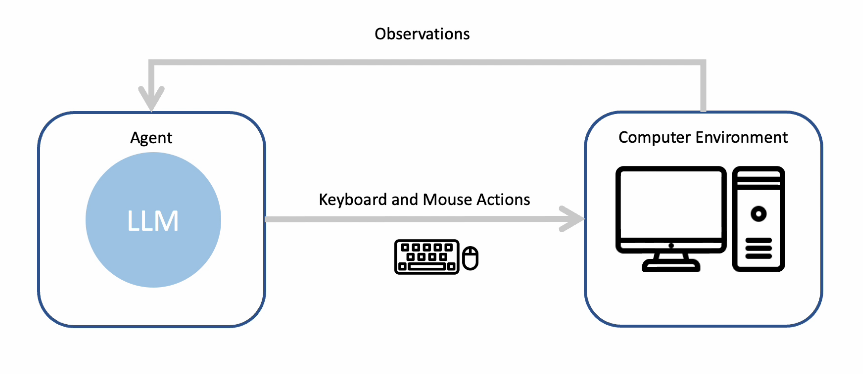

CMU-led research shows that large language models can perform tedious or repetitive tasks by completing keyboard and mouse actions.

◆The research shows that large language models can carry out general computer tasks through keyboard and mouse operations, broadening their range of potential applications.

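◆The technology could improve how work is done, raise productivity and efficiency, drive economic growth, and free people to spend their valuable time on things they enjoy. However, advances in AI call for caution, and rules, regulations, and safeguards need to be discussed.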

<Related information>

Language Models can Solve Computer Tasks

Geunwoo Kim, Pierre Baldi, Stephen McAleer

arXiv Submitted on: 30 Mar 2023

DOI: https://doi.org/10.48550/arXiv.2303.17491

Agents capable of carrying out general tasks on a computer can improve efficiency and productivity by automating repetitive tasks and assisting in complex problem-solving. Ideally, such agents should be able to solve new computer tasks presented to them through natural language commands. However, previous approaches to this problem require large amounts of expert demonstrations and task-specific reward functions, both of which are impractical for new tasks. In this work, we show that a pre-trained large language model (LLM) agent can execute computer tasks guided by natural language using a simple prompting scheme where the agent recursively criticizes and improves its output (RCI). The RCI approach significantly outperforms existing LLM methods for automating computer tasks and surpasses supervised learning (SL) and reinforcement learning (RL) approaches on the MiniWoB++ benchmark. RCI is competitive with the state-of-the-art SL+RL method, using only a handful of demonstrations per task rather than tens of thousands, and without a task-specific reward function. Furthermore, we demonstrate RCI prompting’s effectiveness in enhancing LLMs’ reasoning abilities on a suite of natural language reasoning tasks, outperforming chain of thought (CoT) prompting. We find that RCI combined with CoT performs better than either separately.
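The core loop behind RCI (generate an answer, have the same model criticize it, then have it improve the answer based on that critique) can be illustrated with a minimal sketch. It assumes a hypothetical `llm` callable that maps a prompt string to a completion. The prompt wording, the `max_rounds` limit, and the "no problems" early-stopping rule are illustrative assumptions, not the authors' implementation. The sketch also omits the computer-control side (turning outputs into keyboard and mouse actions on MiniWoB++) entirely.

```python
from typing import Callable

# Hypothetical interface: any function that maps a prompt string to a completion.
LLM = Callable[[str], str]

# Illustrative prompt wording (assumption, not taken from the paper's code).
CRITIQUE_PROMPT = "Review your previous answer and find problems with your answer."
IMPROVE_PROMPT = "Based on the problems you found, improve your answer."


def rci(llm: LLM, task: str, max_rounds: int = 2) -> str:
    """Recursively criticize and improve an LLM answer (minimal sketch)."""
    # Initial attempt at the task.
    answer = llm(f"Task: {task}\nAnswer:")
    for _ in range(max_rounds):
        # Ask the same model to critique its own output.
        critique = llm(f"Task: {task}\nAnswer: {answer}\n{CRITIQUE_PROMPT}")
        # Hypothetical stopping rule: stop early if the critique finds nothing to fix.
        if "no problems" in critique.lower():
            break
        # Ask the model to revise its answer in light of the critique.
        answer = llm(
            f"Task: {task}\nAnswer: {answer}\nCritique: {critique}\n{IMPROVE_PROMPT}"
        )
    return answer


def toy_llm(prompt: str) -> str:
    """Deterministic stand-in for a real model, for demonstration only."""
    if "Critique:" in prompt:
        return "4"  # improvement step
    if "find problems" in prompt:
        # Critique step: accept "4", reject anything else.
        return "No problems found." if "Answer: 4" in prompt else "2 + 2 is not 5."
    return "5"  # deliberately wrong first attempt


print(rci(toy_llm, "What is 2 + 2?"))  # prints 4
```

With the toy model, the first attempt ("5") is criticized and revised to "4". The second critique then reports no problems and the loop stops. A real agent would replace `toy_llm` with calls to a pre-trained LLM and would need its own parsing of the final answer into actions.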