2025-12-02 Tokyo University of Science, National Institute of Advanced Industrial Science and Technology (AIST)

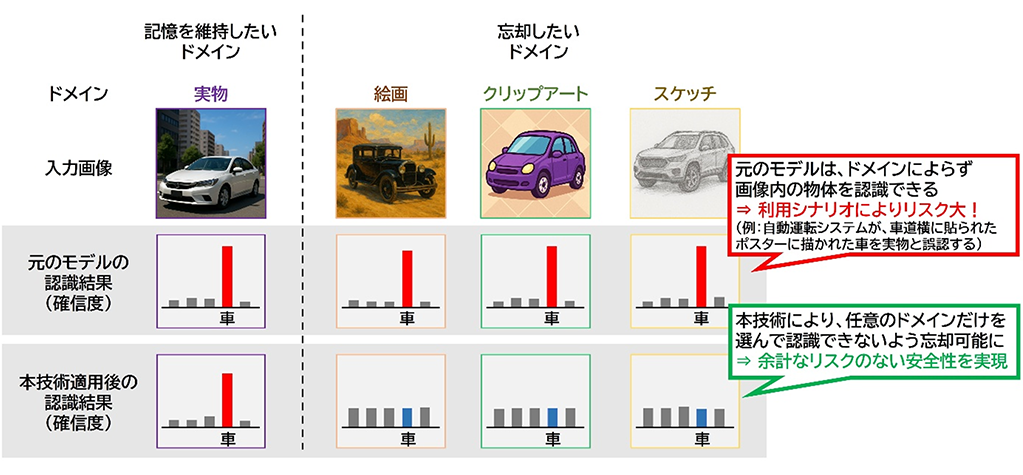

Figure 1: Approximate domain unlearning

<Related information>

- https://www.tus.ac.jp/today/archive/20251202_8376.html

- https://kodaikawamura.github.io/Domain_Unlearning/

Approximate Domain Unlearning for Vision-Language Models

Kodai Kawamura, Yuta Goto, Rintaro Yanagi, Hirokatsu Kataoka, Go Irie

Neural Information Processing Systems (NeurIPS 2025)

Abstract

Pre-trained Vision-Language Models (VLMs) exhibit strong generalization capabilities, enabling them to recognize a wide range of objects across diverse domains without additional training. However, they often retain irrelevant information beyond the requirements of specific target downstream tasks, raising concerns about computational efficiency and potential information leakage. This has motivated growing interest in approximate unlearning, which aims to selectively remove unnecessary knowledge while preserving overall model performance. Existing approaches to approximate unlearning have primarily focused on class unlearning, where a VLM is retrained to fail to recognize specified object classes while maintaining accuracy for others. However, merely forgetting object classes is often insufficient in practical applications. For instance, an autonomous driving system should accurately recognize real cars, while avoiding misrecognition of illustrated cars depicted in roadside advertisements as real cars, which could be hazardous. In this paper, we introduce Approximate Domain Unlearning (ADU), a novel problem setting that requires reducing recognition accuracy for images from specified domains (e.g., illustration) while preserving accuracy for other domains (e.g., real). ADU presents new technical challenges: due to the strong domain generalization capability of pre-trained VLMs, domain distributions are highly entangled in the feature space, making naive approaches based on penalizing target domains ineffective. To tackle this limitation, we propose a novel approach that explicitly disentangles domain distributions and adaptively captures instance-specific domain information. Extensive experiments on three multi-domain benchmark datasets demonstrate that our approach significantly outperforms strong baselines built upon state-of-the-art VLM tuning techniques, paving the way for practical and fine-grained unlearning in VLMs.
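The ADU goal stated in the abstract has two parts: accuracy should drop on the specified (forget) domains and stay high on the other (retain) domains. The abstract does not give the paper's evaluation protocol. The following is therefore only a minimal sketch, assuming plain top-1 accuracy computed per domain and then averaged over forget and retain domains. The function names `per_domain_accuracy` and `adu_summary` are hypothetical and do not come from the authors' code.

```python
from collections import defaultdict


def per_domain_accuracy(preds, labels, domains):
    """Top-1 accuracy per domain, from parallel lists of predictions, labels, and domain tags.

    Hypothetical helper: a sketch, not the paper's evaluation code.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for pred, label, domain in zip(preds, labels, domains):
        total[domain] += 1
        correct[domain] += int(pred == label)
    return {d: correct[d] / total[d] for d in total}


def adu_summary(acc_by_domain, forget_domains):
    """Average accuracy over forget domains (ADU wants this low) and retain domains (want high).

    Hypothetical summary under the assumption that domains are weighted equally.
    """
    def mean(values):
        return sum(values) / len(values) if values else float("nan")

    forget = [a for d, a in acc_by_domain.items() if d in forget_domains]
    retain = [a for d, a in acc_by_domain.items() if d not in forget_domains]
    return {"forget_acc": mean(forget), "retain_acc": mean(retain)}


# Toy example mirroring the abstract's driving scenario: "illustration" is the forget domain.
acc = per_domain_accuracy(
    preds=["car", "car", "dog", "car"],
    labels=["car", "car", "car", "car"],
    domains=["real", "real", "illustration", "illustration"],
)
print(adu_summary(acc, forget_domains={"illustration"}))
```

In this toy run the model still recognizes half of the illustrated cars (`forget_acc` of 0.5), so unlearning is incomplete. An ideal ADU result would push `forget_acc` toward zero while keeping `retain_acc` near the original model's accuracy on real images.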