2025-10-28 Hokkaido University

Examples of images distilled by the proposed method

<Related information>

- https://www.hokudai.ac.jp/news/2025/10/ai-8.html

- https://www.hokudai.ac.jp/news/pdf/251028_pr2.pdf

- https://arxiv.org/abs/2505.24623

- https://arxiv.org/abs/2507.04619

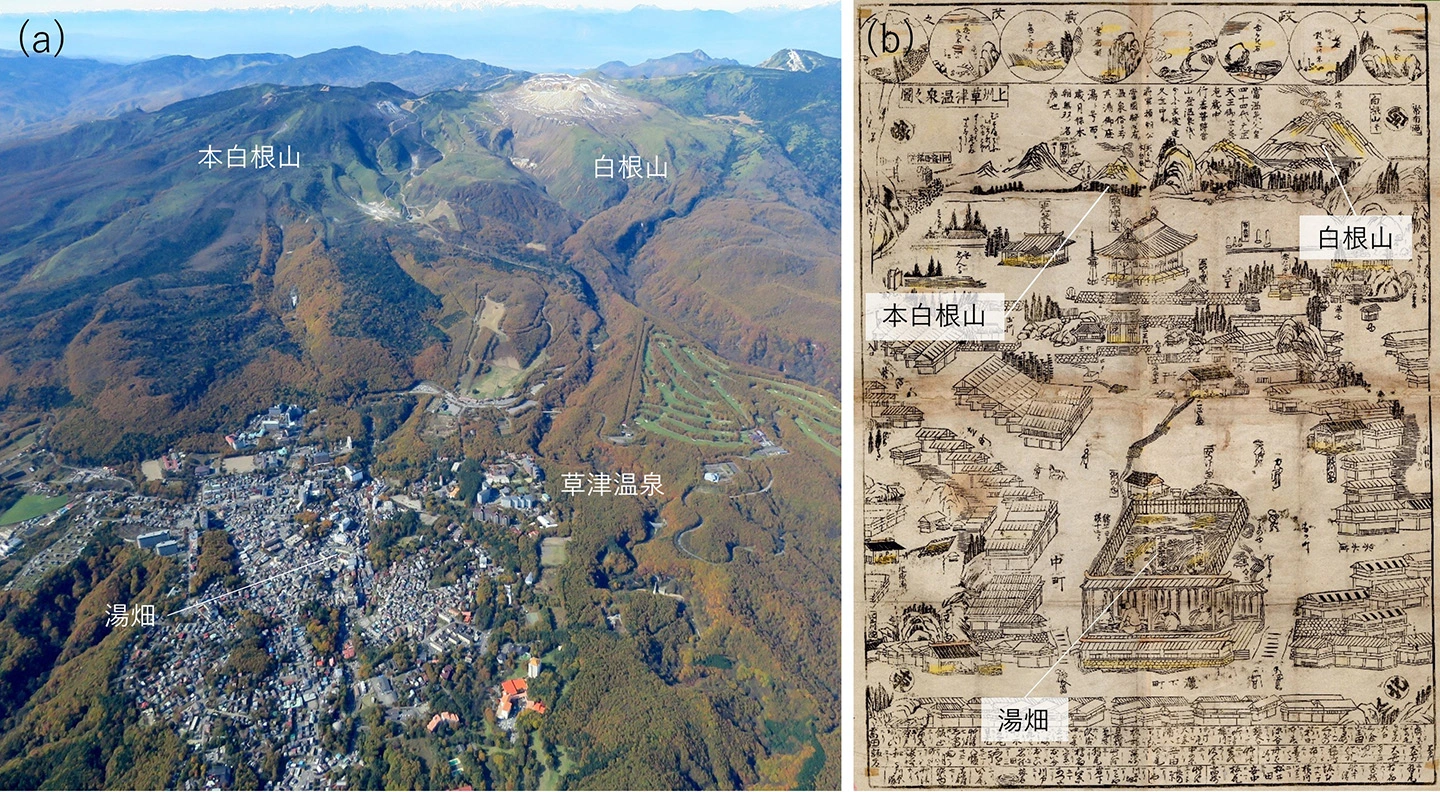

Hyperbolic Dataset Distillation

Wenyuan Li, Guang Li, Keisuke Maeda, Takahiro Ogawa, Miki Haseyama

arXiv, last revised 17 Oct 2025 (v2)

DOI:https://doi.org/10.48550/arXiv.2505.24623

Abstract

To address the computational and storage challenges posed by large-scale datasets in deep learning, dataset distillation has been proposed to synthesize a compact dataset that replaces the original while maintaining comparable model performance. Unlike optimization-based approaches that require costly bi-level optimization, distribution matching (DM) methods improve efficiency by aligning the distributions of synthetic and original data, thereby eliminating nested optimization. DM achieves high computational efficiency and has emerged as a promising solution. However, existing DM methods, constrained to Euclidean space, treat data as independent and identically distributed points, overlooking complex geometric and hierarchical relationships. To overcome this limitation, we propose a novel hyperbolic dataset distillation method, termed HDD. Hyperbolic space, characterized by negative curvature and exponential volume growth with distance, naturally models hierarchical and tree-like structures. HDD embeds features extracted by a shallow network into the Lorentz hyperbolic space, where the discrepancy between synthetic and original data is measured by the hyperbolic (geodesic) distance between their centroids. By optimizing this distance, the hierarchical structure is explicitly integrated into the distillation process, guiding synthetic samples to gravitate towards the root-centric regions of the original data distribution while preserving their underlying geometric characteristics. Furthermore, we find that pruning in hyperbolic space requires only 20% of the distilled core set to retain model performance, while significantly improving training stability. To the best of our knowledge, this is the first work to incorporate the hyperbolic space into the dataset distillation process. The code is available at this https URL.
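The centroid-matching idea described in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: it assumes curvature -1, lifts features onto the hyperboloid with the exponential map at the origin, and uses the normalized mean as the centroid, which may differ from the centroid definition in the paper. The function names and the random toy features standing in for shallow-network outputs are hypothetical.

```python
import numpy as np

def lorentz_inner(x, y):
    """Lorentzian inner product <x, y>_L = -x0*y0 + sum_i xi*yi over the last axis."""
    return -x[..., 0] * y[..., 0] + np.sum(x[..., 1:] * y[..., 1:], axis=-1)

def exp_map_origin(u, eps=1e-9):
    """Lift Euclidean features u of shape (n, d) onto the hyperboloid, shape (n, d+1).

    Uses the exponential map at the origin (1, 0, ..., 0), assuming curvature -1.
    """
    norm = np.linalg.norm(u, axis=-1, keepdims=True)
    direction = u / np.maximum(norm, eps)  # unit direction, safe for zero vectors
    return np.concatenate([np.cosh(norm), np.sinh(norm) * direction], axis=-1)

def lorentz_centroid(x):
    """Average the points, then rescale so the result lies on the hyperboloid again."""
    mean = x.mean(axis=0)
    return mean / np.sqrt(-lorentz_inner(mean, mean))

def geodesic_distance(x, y):
    """Geodesic distance arccosh(-<x, y>_L); the clamp guards against rounding below 1."""
    return np.arccosh(np.maximum(-lorentz_inner(x, y), 1.0))

def centroid_matching_loss(real_feats, syn_feats):
    """Hyperbolic distance between the centroids of real and synthetic features."""
    real_c = lorentz_centroid(exp_map_origin(real_feats))
    syn_c = lorentz_centroid(exp_map_origin(syn_feats))
    return float(geodesic_distance(real_c, syn_c))

# Toy stand-ins for shallow-network features (assumption: 8-dimensional, small norm).
rng = np.random.default_rng(0)
real_feats = 0.5 * rng.normal(size=(100, 8))
syn_feats = 0.5 * rng.normal(size=(10, 8))
print(centroid_matching_loss(real_feats, syn_feats))
```

In an actual distillation loop the synthetic images would be optimized by gradient descent on a loss of this kind, which requires an autodiff framework rather than plain NumPy.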

Information-Guided Diffusion Sampling for Dataset Distillation

Linfeng Ye, Shayan Mohajer Hamidi, Guang Li, Takahiro Ogawa, Miki Haseyama, Konstantinos N. Plataniotis

arXiv, submitted 7 Jul 2025

DOI:https://doi.org/10.48550/arXiv.2507.04619

Abstract

Dataset distillation aims to create a compact dataset that retains essential information while maintaining model performance. Diffusion models (DMs) have shown promise for this task but struggle in low images-per-class (IPC) settings, where generated samples lack diversity. In this paper, we address this issue from an information-theoretic perspective by identifying two key types of information that a distilled dataset must preserve: (i) prototype information I(X; Y), which captures label-relevant features; and (ii) contextual information H(X|Y), which preserves intra-class variability. Here, (X, Y) represents the pair of random variables corresponding to the input data and its ground truth label, respectively. Observing that the required contextual information scales with IPC, we propose maximizing I(X; Y) + βH(X|Y) during the DM sampling process, where β is IPC-dependent. Since directly computing I(X; Y) and H(X|Y) is intractable, we develop variational estimations to tightly lower-bound these quantities via a data-driven approach. Our approach, information-guided diffusion sampling (IGDS), seamlessly integrates with diffusion models and improves dataset distillation across all IPC settings. Experiments on Tiny ImageNet and ImageNet subsets show that IGDS significantly outperforms existing methods, particularly in low-IPC regimes. The code will be released upon acceptance.
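To make the two quantities in the objective concrete, the sketch below computes I(X; Y) and H(X|Y) exactly for a small discrete joint distribution. This is only a toy illustration: the paper works with images, where these quantities are intractable and are replaced by variational lower bounds, so nothing here reflects the authors' estimator. The function names and the example tables are hypothetical.

```python
import numpy as np

def entropy(p):
    """Shannon entropy in nats, ignoring zero-probability entries."""
    p = np.asarray(p, dtype=float)
    nz = p[p > 0]
    return float(-np.sum(nz * np.log(nz)))

def information_terms(joint):
    """Return (I(X;Y), H(X|Y)) for a table with joint[x, y] = P(X=x, Y=y)."""
    joint = np.asarray(joint, dtype=float)
    joint = joint / joint.sum()            # normalize defensively
    h_x = entropy(joint.sum(axis=1))       # H(X) from the marginal of X
    h_y = entropy(joint.sum(axis=0))       # H(Y) from the marginal of Y
    h_x_given_y = entropy(joint.ravel()) - h_y   # H(X|Y) = H(X,Y) - H(Y)
    mutual_info = h_x - h_x_given_y              # I(X;Y) = H(X) - H(X|Y)
    return mutual_info, h_x_given_y

def objective(joint, beta):
    """Toy version of the objective I(X;Y) + beta * H(X|Y)."""
    mutual_info, h_x_given_y = information_terms(joint)
    return mutual_info + beta * h_x_given_y

# X fully determined by Y: all information is "prototype" information.
deterministic = [[0.5, 0.0], [0.0, 0.5]]
# X independent of Y: all information is "contextual" information.
independent = [[0.25, 0.25], [0.25, 0.25]]

for name, table in [("deterministic", deterministic), ("independent", independent)]:
    print(name, information_terms(table), objective(table, beta=0.5))
```

Because I(X; Y) + H(X|Y) = H(X), setting β = 1 reduces the objective to the entropy of X, while smaller β shifts weight toward the label-relevant term.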