2025-02-11 カリフォルニア大学サンディエゴ校 (UCSD)

<関連情報>

- https://today.ucsd.edu/story/uc-san-diego-machine-learning-initiative-aims-to-advance-ai-systems

- https://arxiv.org/abs/2401.09670

- https://dl.acm.org/doi/10.1145/3650212.3652141

DistServe: Goodputに最適化された大規模言語モデルサービングのためのプリフィルとデコードの分離 DistServe: Disaggregating Prefill and Decoding for Goodput-optimized Large Language Model Serving

Yinmin Zhong, Shengyu Liu, Junda Chen, Jianbo Hu, Yibo Zhu, Xuanzhe Liu, Xin Jin, Hao Zhang

arXiv last revised 6 Jun 2024 (this version, v3)

DOI:https://doi.org/10.48550/arXiv.2401.09670

Abstract

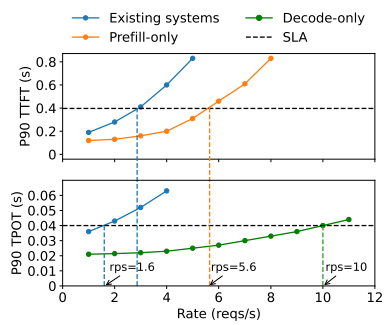

DistServe improves the performance of large language models (LLMs) serving by disaggregating the prefill and decoding computation. Existing LLM serving systems colocate the two phases and batch the computation of prefill and decoding across all users and requests. We find that this strategy not only leads to strong prefill-decoding interferences but also couples the resource allocation and parallelism plans for both phases. LLM applications often emphasize individual latency for each phase: time to first token (TTFT) for the prefill phase and time per output token (TPOT) of each request for the decoding phase. In the presence of stringent latency requirements, existing systems have to prioritize one latency over the other, or over-provision compute resources to meet both.

DistServe assigns prefill and decoding computation to different GPUs, hence eliminating prefill-decoding interferences. Given the application’s TTFT and TPOT requirements, DistServe co-optimizes the resource allocation and parallelism strategy tailored for each phase. DistServe also places the two phases according to the serving cluster’s bandwidth to minimize the communication caused by disaggregation. As a result, DistServe significantly improves LLM serving performance in terms of the maximum rate that can be served within both TTFT and TPOT constraints on each GPU. Our evaluations show that on various popular LLMs, applications, and latency requirements, DistServe can serve 7.4x more requests or 12.6x tighter SLO, compared to state-of-the-art systems, while staying within latency constraints for > 90% of requests.

WebAssemblyリバースエンジニアリングのためのマルチモーダル学習 Multi-modal Learning for WebAssembly Reverse Engineering

Hanxian Huang, Jishen Zhao

ISSTA 2024: Proceedings of the 33rd ACM SIGSOFT International Symposium on Software Testing and Analysis Published: 11 September 2024

DOI:https://doi.org/10.1145/3650212.3652141

Abstract

The increasing adoption of WebAssembly (Wasm) for performance-critical and security-sensitive tasks drives the demand for WebAssembly program comprehension and reverse engineering. Recent studies have introduced machine learning (ML)-based WebAssembly reverse engineering tools. Yet, the generalization of task-specific ML solutions remains challenging, because their effectiveness hinges on the availability of an ample supply of high-quality task-specific labeled data. Moreover, previous works trained models only with features extracted from WebAssembly, overlooking the high-level semantics present in the corresponding source code and its documentation. Acknowledging the abundance of available source code with documentation, which can be compiled into WebAssembly, we propose to learn representations of them concurrently and harness their mutual relationships for effective WebAssembly reverse engineering. In this paper, we present WasmRev, the first multi-modal pre-trained language model for WebAssembly reverse engineering. WasmRev is pre-trained using self-supervised learning on a large-scale multi-modal corpus encompassing source code, code documentation and the compiled WebAssembly, without requiring labeled data. WasmRev incorporates three tailored multi-modal pre-training tasks to capture various characteristics of WebAssembly and cross-modal relationships. WasmRev is only trained once to produce general-purpose representations that can broadly support WebAssembly reverse engineering tasks through few-shot fine-tuning with much less labeled data, improving data efficiency. We fine-tune WasmRev onto three important reverse engineering tasks: type recovery, function purpose identification and WebAssembly summarization. Our results show that WasmRev pre-trained on the corpus of multi-modal samples establishes a robust foundation for these tasks, achieving high task accuracy and outperforming the state-of-the-art ML methods for WebAssembly reverse engineering.