2024-09-16 École Polytechnique Fédérale de Lausanne (EPFL)

<Related information>

- https://actu.epfl.ch/news/large-language-models-feel-the-direction-of-time/

- https://arxiv.org/abs/2401.17505

Arrows of Time for Large Language Models

Vassilis Papadopoulos, Jérémie Wenger, Clément Hongler

arXiv last revised 24 Jul 2024 (this version, v4)

DOI: https://doi.org/10.48550/arXiv.2401.17505

Abstract

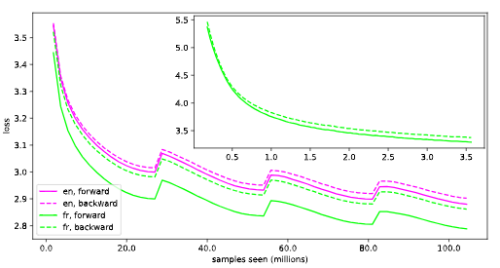

We study the probabilistic modeling performed by Autoregressive Large Language Models (LLMs) through the angle of time directionality, addressing a question first raised in (Shannon, 1951). For large enough models, we empirically find a time asymmetry in their ability to learn natural language: a difference in the average log-perplexity when trying to predict the next token versus when trying to predict the previous one. This difference is at the same time subtle and very consistent across various modalities (language, model size, training time, …). Theoretically, this is surprising: from an information-theoretic point of view, there should be no such difference. We provide a theoretical framework to explain how such an asymmetry can appear from sparsity and computational complexity considerations, and outline a number of perspectives opened by our results.
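The information-theoretic claim in the abstract follows from the chain rule. Any joint distribution over a sequence can be factored left to right, log p(x_1, ..., x_T) = Σ_t log p(x_t | x_1, ..., x_{t-1}), or right to left, log p(x_1, ..., x_T) = Σ_t log p(x_t | x_{t+1}, ..., x_T). Both factorizations give the same total log-likelihood, so an exact model has the same average log-perplexity in both directions. The minimal sketch below checks this numerically on a toy joint distribution. The vocabulary, sequence length, random weights, and helper names (`make_joint`, `forward_logprobs`, `backward_logprobs`, `avg_log_perplexity`) are hypothetical illustrations, not taken from the paper. The sketch only shows the symmetry for an exact distribution. It does not model how a trained LLM learns, which is where the reported asymmetry appears.

```python
import itertools
import math
import random

# Hypothetical toy setup (not from the paper): an arbitrary joint distribution
# over all length-3 sequences drawn from a 3-symbol vocabulary.
VOCAB = "abc"
LENGTH = 3


def make_joint(seed=0):
    """Return a dict mapping each sequence (a tuple) to a positive probability."""
    rng = random.Random(seed)
    seqs = list(itertools.product(VOCAB, repeat=LENGTH))
    weights = [rng.random() + 0.01 for _ in seqs]  # strictly positive weights
    total = sum(weights)
    return {s: w / total for s, w in zip(seqs, weights)}


def prefix_prob(joint, prefix):
    """Marginal probability that a sequence starts with `prefix` (1.0 if empty)."""
    n = len(prefix)
    return sum(p for s, p in joint.items() if s[:n] == prefix)


def suffix_prob(joint, suffix):
    """Marginal probability that a sequence ends with `suffix` (1.0 if empty)."""
    n = len(suffix)
    return sum(p for s, p in joint.items() if s[LENGTH - n:] == suffix)


def forward_logprobs(joint, seq):
    """log p(x_t | x_1..x_{t-1}) at each position: next-token prediction."""
    return [math.log(prefix_prob(joint, seq[: t + 1]) / prefix_prob(joint, seq[:t]))
            for t in range(LENGTH)]


def backward_logprobs(joint, seq):
    """log p(x_t | x_{t+1}..x_T) at each position: previous-token prediction."""
    return [math.log(suffix_prob(joint, seq[t:]) / suffix_prob(joint, seq[t + 1:]))
            for t in range(LENGTH)]


def avg_log_perplexity(joint, logprob_fn):
    """Expected per-token negative log-likelihood (nats) under the joint itself."""
    return sum(p * -sum(logprob_fn(joint, s)) / LENGTH for s, p in joint.items())


joint = make_joint()
fwd = avg_log_perplexity(joint, forward_logprobs)
bwd = avg_log_perplexity(joint, backward_logprobs)
print(f"forward:  {fwd:.6f} nats/token")
print(f"backward: {bwd:.6f} nats/token")
print(f"gap:      {bwd - fwd:.2e}")
```

Both per-sequence sums telescope to log p(x_1, ..., x_T), so the two averages coincide with H(X_1, ..., X_T)/T up to floating-point error. A gap measured for a trained model therefore cannot come from the data distribution alone. It has to come from how hard each direction is for the model to learn, which is what the sparsity and computational-complexity framework in the abstract addresses.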