2026-02-04 University of Washington (UW)

<Related information>

- https://www.washington.edu/news/2026/02/04/in-a-study-ai-model-openscholar-synthesizes-scientific-research-and-cites-sources-as-accurately-as-human-experts/

- https://www.nature.com/articles/s41586-025-10072-4/figures/1

Synthesizing scientific literature with retrieval-augmented language models

Akari Asai, Jacqueline He, Rulin Shao, Weijia Shi, Amanpreet Singh, Joseph Chee Chang, Kyle Lo, Luca Soldaini, Sergey Feldman, Mike D’Arcy, David Wadden, Matt Latzke, Jenna Sparks, Jena D. Hwang, Varsha Kishore, Minyang Tian, Pan Ji, Shengyan Liu, Hao Tong, Bohao Wu, Yanyu Xiong, Luke Zettlemoyer, Graham Neubig, Daniel S. Weld, … Hannaneh Hajishirzi

Nature, published 04 February 2026

DOI: https://doi.org/10.1038/s41586-025-10072-4

Abstract

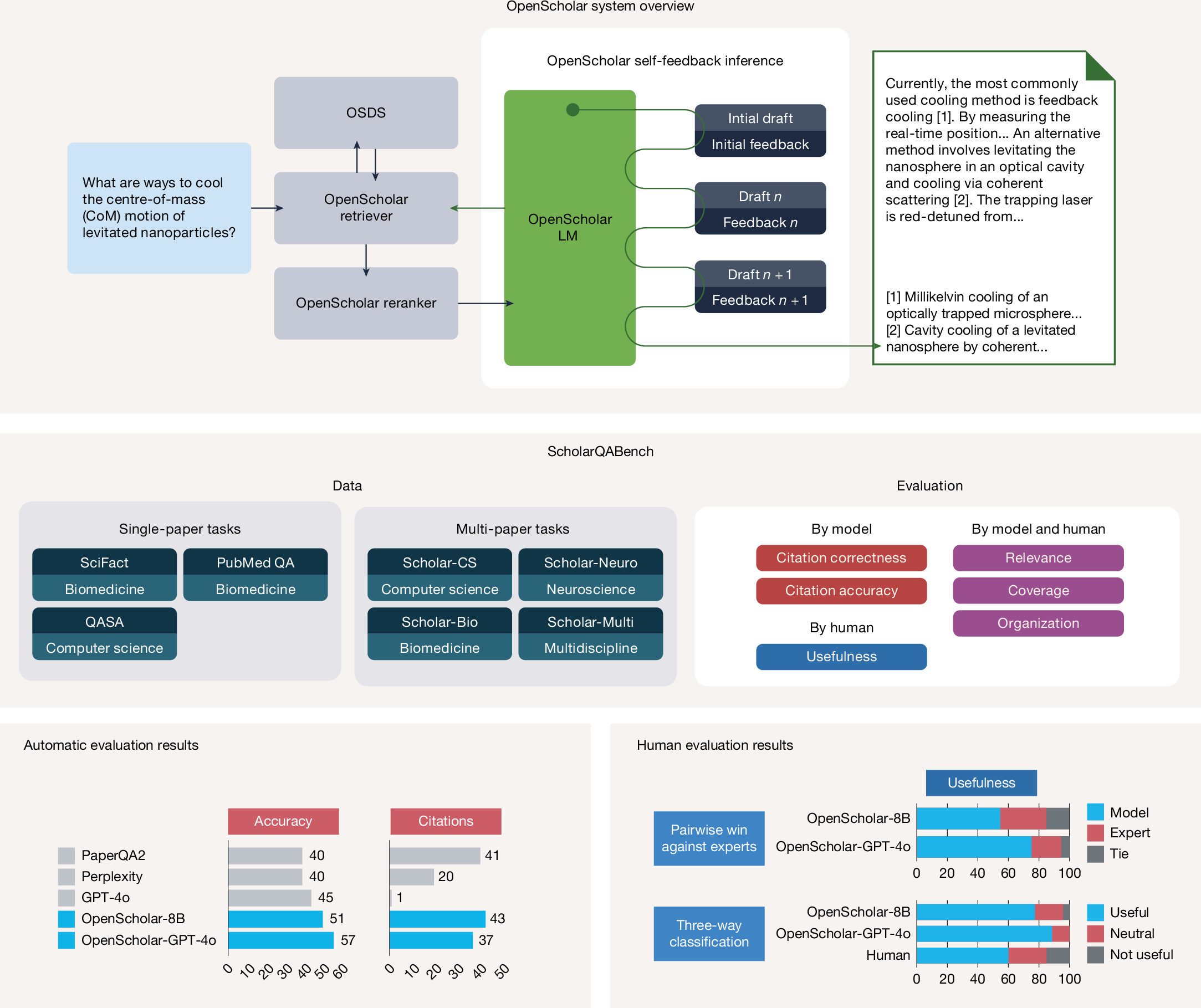

Scientific progress depends on the ability of researchers to synthesize the growing body of literature. Can large language models (LLMs) assist scientists in this task? Here we introduce OpenScholar, a specialized retrieval-augmented language model (LM)¹ that answers scientific queries by identifying relevant passages from 45 million open-access papers and synthesizing citation-backed responses. To evaluate OpenScholar, we develop ScholarQABench, the first large-scale multi-domain benchmark for literature search, comprising 2,967 expert-written queries and 208 long-form answers across computer science, physics, neuroscience and biomedicine. Despite being a smaller open model, OpenScholar-8B outperforms GPT-4o by 6.1% and PaperQA2 by 5.5% in correctness on a challenging multi-paper synthesis task from the new ScholarQABench. Although GPT-4o hallucinates citations 78–90% of the time, OpenScholar achieves citation accuracy on par with human experts. OpenScholar’s data store, retriever and self-feedback inference loop improve off-the-shelf LMs: for instance, OpenScholar-GPT-4o improves the correctness of GPT-4o by 12%. In human evaluations, experts preferred OpenScholar-8B and OpenScholar-GPT-4o responses over expert-written ones 51% and 70% of the time, respectively, compared with 32% for GPT-4o. We open-source all artefacts, including our code, models, data store, datasets and a public demo.
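The abstract's core idea is to retrieve passages from a data store, answer with claims that cite those passages, and check the citations. The minimal Python sketch below illustrates only that general retrieve-then-cite pattern. It rests on loudly stated assumptions and is not OpenScholar's implementation:

* `DATASTORE` is a hypothetical three-passage toy store.
* Retrieval uses bag-of-words cosine similarity in place of OpenScholar's trained retriever.
* "Synthesis" is purely extractive, with no language model involved.
* `verify_citations` is a simple existence-and-overlap check, not the paper's self-feedback loop.

All function names and the `min_overlap` threshold are illustrative.

```python
import math
import re
from collections import Counter

# Hypothetical toy data store of (paper_id, passage). A real system would index
# millions of passages with a trained retriever; this is only an illustration.
DATASTORE = [
    ("paper-A", "Retrieval-augmented language models condition generation on retrieved passages."),
    ("paper-B", "Citation hallucination occurs when a model cites papers that do not exist."),
    ("paper-C", "Protein folding can be predicted from amino acid sequences."),
]


def tokenize(text: str) -> list[str]:
    """Lowercase alphanumeric tokens; a stand-in for a real tokenizer."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 2) -> list[tuple[str, str, float]]:
    """Return up to k passages with nonzero similarity to the query, best first."""
    q = Counter(tokenize(query))
    scored = [(pid, text, cosine(q, Counter(tokenize(text)))) for pid, text in DATASTORE]
    scored.sort(key=lambda s: s[2], reverse=True)
    return [s for s in scored[:k] if s[2] > 0]


def synthesize(query: str, k: int = 2) -> list[tuple[str, str]]:
    """Toy extractive 'generator': each retrieved passage becomes a claim that
    cites its source, so every citation points at a passage that was retrieved."""
    return [(text, pid) for pid, text, _ in retrieve(query, k)]


def verify_citations(claims: list[tuple[str, str]], min_overlap: float = 0.2) -> list[tuple[str, str]]:
    """Assumed check: keep a (claim, paper_id) pair only if the cited paper exists
    in the data store and its passage is lexically similar enough to the claim."""
    store = dict(DATASTORE)
    kept = []
    for claim, pid in claims:
        passage = store.get(pid)
        if passage is None:
            continue  # citation to a paper that is not in the data store
        if cosine(Counter(tokenize(claim)), Counter(tokenize(passage))) >= min_overlap:
            kept.append((claim, pid))
    return kept


if __name__ == "__main__":
    query = "How do retrieval-augmented language models use passages?"
    for claim, pid in synthesize(query):
        print(f"{claim} [{pid}]")
    # A fabricated citation ("paper-Z") and a mismatched one are filtered out.
    candidate = [
        ("Retrieval-augmented language models condition generation on retrieved passages.", "paper-A"),
        ("Large models never make mistakes.", "paper-Z"),
        ("Protein folding can be predicted.", "paper-B"),
    ]
    print(verify_citations(candidate))
```

Tying each claim to a passage that is actually present in the store is what rules out citations to non-existent papers in this toy. A lexical-overlap threshold is a crude proxy for whether a passage really supports a claim, and a production system would need a much stronger check.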