2025-12-04 Pennsylvania State University (Penn State)

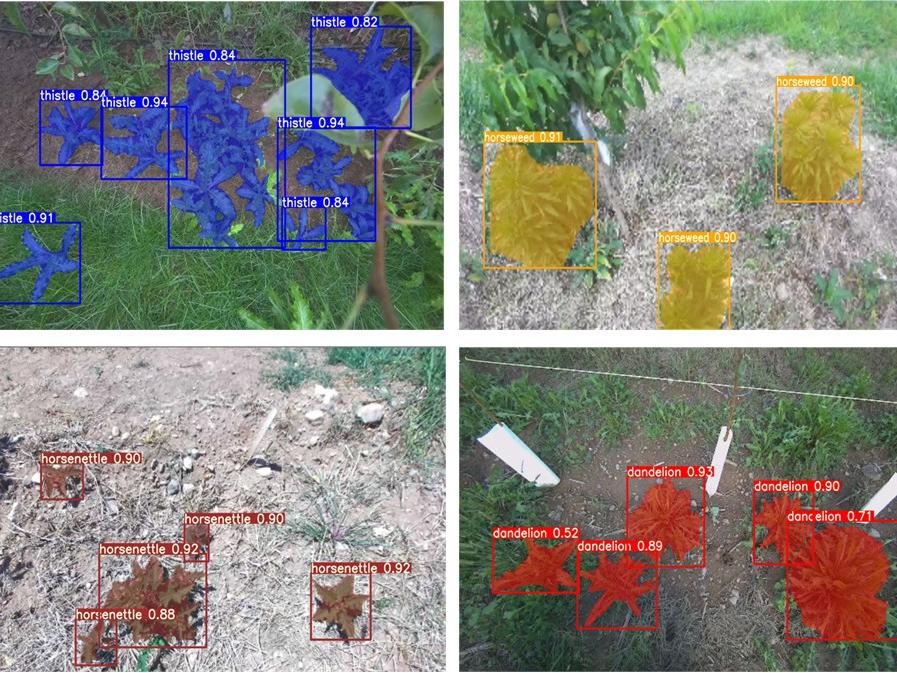

These photos show images of different weed species that the researchers trained the artificial intelligence (AI) machine vision model to recognize. That model is intended to guide an automated robotic precision herbicide spraying unit under development in the Department of Agricultural and Biological Engineering to control weeds in apple orchards. Credit: Penn State. Creative Commons

<Related information>

- https://www.psu.edu/news/research/story/eyes-agricultural-robot-ai-system-identifies-weeds-apple-orchards

- https://www.sciencedirect.com/science/article/abs/pii/S0168169925011779

YOLOv7-CBAM and DeepSORT with pixel grid analysis for Real-Time weed localization and Intra-Row density estimation in apple orchards

Lawrence Arthur, Sadjad Mahnan, Long He, Magni Hussain, Paul Heinemann, Caio Brunharo

Computers and Electronics in Agriculture, available online 10 October 2025

DOI: https://doi.org/10.1016/j.compag.2025.111071

Highlights

- YOLOv7_seg-CBAM achieves mAP@0.5 of 84.9% for weed segmentation and 83.6% for localization.

- Horsenettle detection excels with AP of 0.96 and Recall of 0.92 in real-time orchard video analysis.

- DeepSORT with dynamic crossline achieves MOTA of 0.82, IDF1 of 0.88 for real-time weed tracking.

- Pixel grid-based method estimates weed density at 75%, 50%, 25% coverage thresholds.

- Grid method optimizes weed density at a 50% threshold for precise spraying.
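The last two highlights describe a grid-based density estimate without giving the procedure. The following is a minimal sketch of one plausible reading, not the authors' implementation: a binary segmentation mask is split into square cells, the weed coverage of each cell is computed, and density is reported as the share of cells that meet each coverage threshold. The function names, the cell size, and the definition of density as a share of cells are all assumptions made for illustration.

```python
from typing import Dict, List, Sequence

# Binary weed mask: 1 = weed pixel, 0 = background (assumed input format).
Mask = Sequence[Sequence[int]]


def cell_coverage(mask: Mask, cell: int) -> List[float]:
    """Fraction of weed pixels in each cell-by-cell grid square, row-major."""
    if cell <= 0 or not mask or not mask[0]:
        raise ValueError("need a non-empty mask and a positive cell size")
    rows, cols = len(mask), len(mask[0])
    coverage = []
    for top in range(0, rows, cell):
        for left in range(0, cols, cell):
            # Cells on the right and bottom edges are clipped to the image.
            block = [
                mask[r][c]
                for r in range(top, min(top + cell, rows))
                for c in range(left, min(left + cell, cols))
            ]
            coverage.append(sum(block) / len(block))
    return coverage


def density_by_threshold(
    mask: Mask, cell: int, thresholds: Sequence[float] = (0.75, 0.50, 0.25)
) -> Dict[float, float]:
    """Share of grid cells whose weed coverage meets each threshold."""
    coverage = cell_coverage(mask, cell)
    return {t: sum(v >= t for v in coverage) / len(coverage) for t in thresholds}


# Hypothetical 4x4 mask, split into four 2x2 cells.
example_mask = [
    [1, 1, 0, 0],
    [1, 1, 1, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
print(density_by_threshold(example_mask, cell=2))
```

In this made-up mask, one cell in four is fully covered and two more reach exactly 25% coverage, so the estimate is 0.25 at the 75% and 50% thresholds and 0.75 at the 25% threshold. A lower threshold flags more cells as weedy, which is the trade-off a spraying decision would have to weigh.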

Abstract

In precision weed management, accurate detection, localization, and density estimation of weeds are crucial for effective decision-making. However, complex settings such as apple orchards, crop canopies, and low-hanging branches obstruct traditional top-view camera systems, requiring a side-view camera configuration that often leads to partial weed visibility and occlusion overlaps, resulting in misclassification or tracking loss of weeds. To address these challenges, this study enhances the YOLOv7 segmentation model with a Convolutional Block Attention Module (CBAM) for improved feature extraction and real-time detection of weed species. We integrated the DeepSORT algorithm, leveraging its robust tracking capabilities with a dynamic Kalman filtering cross-line mechanism to minimize detection loss from occlusions across frames. The enhanced model achieved a mean Average Precision (mAP) of 84.9% for segmentation and 83.6% for localization, while tracking performance showed a Multiple Object Tracking Accuracy (MOTA) of 0.82, Multiple Object Tracking Precision (MOTP) of 0.78, and an Identification F1-score (IDF1) of 0.88, with only six identity switches. Additionally, a novel pixel grid-based method estimates weed density at 75%, 50%, and 25% mask coverage thresholds, delivering a detailed and actionable assessment of the weed severity baseline. The effective quantification and enhanced detection and tracking capabilities of the model imply that precision weed management decisions in apple orchards can be significantly improved.
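To make the tracking scores in the abstract easier to read, here is a short sketch of how MOTA and IDF1 are commonly computed from per-sequence error counts. It uses the widely used definitions of the two metrics, MOTA = 1 - (FN + FP + IDSW) / GT and IDF1 = 2·IDTP / (2·IDTP + IDFP + IDFN). The source does not state which evaluation tooling the authors used, and the counts below are hypothetical values chosen only to exercise the formulas, not data from the paper.

```python
def mota(false_negatives: int, false_positives: int,
         id_switches: int, ground_truth: int) -> float:
    """Multiple Object Tracking Accuracy from total error counts.

    ground_truth is the total number of ground-truth objects summed over frames.
    """
    if ground_truth <= 0:
        raise ValueError("ground_truth must be positive")
    errors = false_negatives + false_positives + id_switches
    return 1.0 - errors / ground_truth


def idf1(id_true_pos: int, id_false_pos: int, id_false_neg: int) -> float:
    """Identification F1: harmonic mean of identification precision and recall."""
    denominator = 2 * id_true_pos + id_false_pos + id_false_neg
    return 2 * id_true_pos / denominator if denominator else 0.0


# Hypothetical counts for illustration only.
print(round(mota(false_negatives=10, false_positives=5,
                 id_switches=5, ground_truth=100), 3))
print(round(idf1(id_true_pos=80, id_false_pos=10, id_false_neg=10), 3))
```

MOTA penalizes every missed weed, false detection, and identity switch equally, so it mostly reflects detection quality. IDF1 instead rewards keeping the same identity on the same weed over time, which matters when each plant should be counted once as the camera moves along the row.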