2024-11-20 Oak Ridge National Laboratory (ORNL)

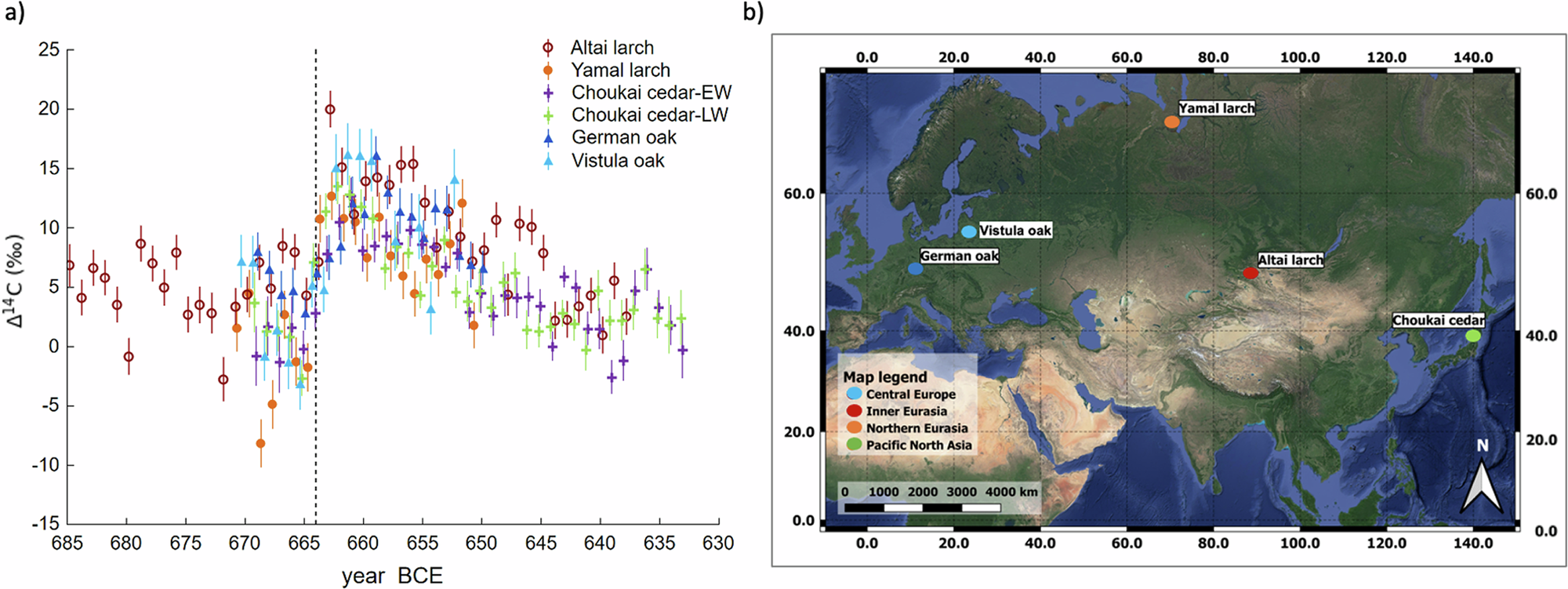

A small sample from the Frontier simulations reveals the evolution of the expanding universe in a region containing a massive cluster of galaxies from billions of years ago to the present day (left). Red areas show hotter gases, with temperatures reaching 100 million kelvin or more. Zooming in (right), star tracer particles track the formation of galaxies and their movement over time. Credit: Argonne National Laboratory, U.S. Dept. of Energy

<Related information>

- https://www.ornl.gov/news/record-breaking-run-frontier-sets-new-bar-simulating-universe-exascale-era

The universe just got a whole lot bigger, at least in the world of computer simulations.

In early November, researchers at the Department of Energy’s Argonne National Laboratory used the fastest supercomputer on the planet to run the largest astrophysical simulation of the universe ever conducted.

The achievement was made using the Frontier supercomputer at Oak Ridge National Laboratory. The calculations set a new benchmark for cosmological hydrodynamics simulations and provide a new foundation for simulating the physics of atomic matter and dark matter simultaneously. The simulation size corresponds to surveys undertaken by large telescope observatories, a feat that until now has not been possible at this scale.

“There are two components in the universe: dark matter (which, as far as we know, only interacts gravitationally) and conventional matter, or atomic matter,” said project lead Salman Habib, division director for Computational Sciences at Argonne.

“So, if we want to know what the universe is up to, we need to simulate both of these things: gravity as well as all the other physics including hot gas, and the formation of stars, black holes and galaxies,” he said. “The astrophysical ‘kitchen sink’ so to speak. These simulations are what we call cosmological hydrodynamics simulations.”

Not surprisingly, cosmological hydrodynamics simulations are significantly more computationally expensive and much more difficult to carry out than simulations of an expanding universe that involve only the effects of gravity.

“For example, if we were to simulate a large chunk of the universe surveyed by one of the big telescopes such as the Rubin Observatory in Chile, you’re talking about looking at huge chunks of time — billions of years of expansion,” Habib said. “Until recently, we couldn’t even imagine doing such a large simulation like that except in the gravity-only approximation.”

The supercomputer code used in the simulation is called HACC, short for Hardware/Hybrid Accelerated Cosmology Code. It was developed around 15 years ago for petascale machines. In 2012 and 2013, HACC was a finalist for the Association for Computing Machinery’s Gordon Bell Prize in computing.

Later, HACC was significantly upgraded as part of ExaSky, a special project led by Habib within the Exascale Computing Project, or ECP — a $1.8 billion DOE initiative that ran from 2016 to 2024. The project brought together thousands of experts to develop advanced scientific applications and software tools for the upcoming wave of exascale-class supercomputers capable of performing more than a quintillion, or a billion-billion, calculations per second.
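To put "a quintillion, or a billion-billion" in concrete terms, the short sketch below checks the arithmetic and compares how long a fixed workload would take at petascale and exascale rates. The workload size of 10^21 operations and the helper name `seconds_at_rate` are hypothetical, chosen only for illustration; they are not figures from the HACC runs.

```python
# A billion-billion is 10**18, i.e. one quintillion.
BILLION = 10**9
QUINTILLION = 10**18
assert BILLION * BILLION == QUINTILLION

# Nominal sustained rates in operations per second.
PETASCALE = 10**15
EXASCALE = 10**18


def seconds_at_rate(total_ops: int, ops_per_second: int) -> float:
    """Time in seconds to finish total_ops at a constant rate."""
    return total_ops / ops_per_second


# Hypothetical workload (an assumption, not a number from the article).
workload = 10**21

print(seconds_at_rate(workload, PETASCALE))  # 1000000.0 seconds (~11.6 days)
print(seconds_at_rate(workload, EXASCALE))   # 1000.0 seconds (~17 minutes)
```

The factor of 1,000 between the two rates is what separates a multi-day job from one that finishes in minutes, assuming the code can actually sustain the machine's rate.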

As part of ExaSky, the HACC research team spent the last seven years adding new capabilities to the code and re-optimizing it to run on exascale machines powered by GPU accelerators. A requirement of the ECP was for codes to run approximately 50 times faster than they could before on Titan, the fastest supercomputer at the time of the ECP’s launch. Running on the exascale-class Frontier supercomputer, HACC was nearly 300 times faster than the reference run.
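The speedup figures above can be compared with a few lines of arithmetic. This is only an illustrative sketch: "nearly 300" is rounded to 300, and the 600-hour reference runtime is a hypothetical value, not a measurement reported for Titan.

```python
ECP_TARGET_SPEEDUP = 50   # required speedup over the Titan reference run
HACC_SPEEDUP = 300        # "nearly 300 times faster", rounded for illustration


def margin_over_target(achieved: float, target: float) -> float:
    """How many times the achieved speedup exceeds the required one."""
    return achieved / target


def runtime_after_speedup(reference_hours: float, speedup: float) -> float:
    """Runtime of the same problem after applying a speedup factor."""
    return reference_hours / speedup


print(margin_over_target(HACC_SPEEDUP, ECP_TARGET_SPEEDUP))  # 6.0

# Hypothetical reference runtime (an assumption, not from the article).
reference_hours = 600
print(runtime_after_speedup(reference_hours, ECP_TARGET_SPEEDUP))  # 12.0
print(runtime_after_speedup(reference_hours, HACC_SPEEDUP))        # 2.0
```

Under these assumptions, HACC on Frontier beats the ECP requirement by roughly a factor of six.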

The simulation achieved its record-breaking performance by using approximately 9,000 of Frontier’s compute nodes, powered by AMD Instinct™ MI250X GPUs. Frontier is located at ORNL’s Oak Ridge Leadership Computing Facility, or OLCF.

“It’s not only the sheer size of the physical domain, which is necessary to make direct comparison to modern survey observations enabled by exascale computing,” said Bronson Messer, OLCF director of science. “It’s also the added physical realism of including the baryons and all the other dynamic physics that makes this simulation a true tour de force for Frontier.”

In addition to Habib, the HACC team members involved in the achievement and in other simulations building up to the work on Frontier include Michael Buehlmann, JD Emberson, Katrin Heitmann, Patricia Larsen, Adrian Pope, Esteban Rangel and Nicholas Frontiere, who led the Frontier simulations.

Prior to the runs on Frontier, parameter scans for HACC were conducted on the Perlmutter supercomputer at the National Energy Research Scientific Computing Center, or NERSC, at Lawrence Berkeley National Laboratory. HACC was also run at scale on the exascale-class Aurora supercomputer at the Argonne Leadership Computing Facility, or ALCF.

OLCF, ALCF and NERSC are DOE Office of Science user facilities.

This research was supported by the ECP and the Advanced Scientific Computing Research and High Energy Physics programs under the DOE Office of Science.

UT-Battelle manages ORNL for DOE’s Office of Science, the single largest supporter of basic research in the physical sciences in the United States. DOE’s Office of Science is working to address some of the most pressing challenges of our time. For more information,