2025-10-08 North Carolina State University (NC State)

<Related information>

- https://news.ncsu.edu/2025/10/ai-privacy-hardware-vulnerability/

- https://arxiv.org/abs/2507.17033

- https://arxiv.org/pdf/2507.17033

GATEBLEED: Exploiting On-Core Accelerator Power Gating for High Performance & Stealthy Attacks on AI

Joshua Kalyanapu, Farshad Dizani, Darsh Asher, Azam Ghanbari, Rosario Cammarota, Aydin Aysu, Samira Mirbagher Ajorpaz

arXiv last revised: 2 Oct 2025 (this version, v3)

DOI: https://doi.org/10.48550/arXiv.2507.17033

Abstract

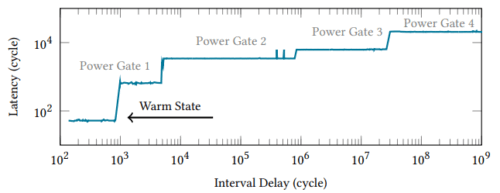

As power consumption from AI training and inference continues to increase, AI accelerators are being integrated directly into the CPU. Intel’s Advanced Matrix Extensions (AMX) is one such example, debuting on the 4th generation Intel Xeon Scalable CPU. We discover a timing side and covert channel, GATEBLEED, caused by the aggressive power gating utilized to keep the CPU within operating limits. We show that the GATEBLEED side channel is a threat to AI privacy as many ML models such as transformers and CNNs make critical computationally-heavy decisions based on private values like confidence thresholds and routing logits. Timing delays from selective powering down of AMX components mean that each matrix multiplication is a potential leakage point when executed on the AMX accelerator. Our research identifies over a dozen potential gadgets across popular ML libraries (HuggingFace, PyTorch, TensorFlow, etc.), revealing that they can leak sensitive and private information. GATEBLEED poses a risk for local and remote timing inference, even under previous protective measures. GATEBLEED can be used as a high performance, stealthy remote covert channel and a generic magnifier for timing transmission channels, capable of bypassing traditional cache defenses to leak arbitrary memory addresses and evading state of the art microarchitectural attack detectors under realistic network conditions and system configurations in which previous attacks fail. We implement an end-to-end microarchitectural inference attack on a transformer model optimized with Intel AMX, achieving a membership inference accuracy of 81% and a precision of 0.89. In a CNN-based or transformer-based mixture-of-experts model optimized with Intel AMX, we leak expert choice with 100% accuracy. To our knowledge, this is the first side-channel attack on AI privacy that exploits hardware optimizations.
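To make the abstract's core idea concrete, the following is a minimal sketch of how a latency difference can reveal a binary hidden state, and how accuracy and precision are scored for such a guess. It is a toy simulation, not the authors' attack: it performs no real measurement and touches no AMX hardware. The function names, the latency distribution, the wake-up penalty, and the threshold are all hypothetical values chosen for illustration.

```python
import random


def simulate_latency(rng, cold):
    """Return a synthetic latency in arbitrary units.

    ASSUMPTION: a "cold" (power-gated) unit adds a fixed wake-up penalty.
    All numbers here are invented for illustration, not measurements.
    """
    base = rng.gauss(100.0, 5.0)
    return base + (50.0 if cold else 0.0)


def classify(latency, threshold=125.0):
    """Guess that the unit was cold when latency exceeds the threshold."""
    return latency > threshold


def score(labels, guesses):
    """Compute accuracy and precision for the positive ("cold") class."""
    pairs = list(zip(labels, guesses))
    true_pos = sum(1 for y, g in pairs if y and g)
    false_pos = sum(1 for y, g in pairs if not y and g)
    correct = sum(1 for y, g in pairs if y == g)
    accuracy = correct / len(pairs)
    predicted_pos = true_pos + false_pos
    precision = true_pos / predicted_pos if predicted_pos else 0.0
    return accuracy, precision


def run_demo(n=1000, seed=0):
    """Simulate n observations and score the threshold classifier."""
    rng = random.Random(seed)
    labels = [rng.random() < 0.5 for _ in range(n)]
    latencies = [simulate_latency(rng, cold) for cold in labels]
    guesses = [classify(t) for t in latencies]
    return score(labels, guesses)


if __name__ == "__main__":
    acc, prec = run_demo()
    print(f"accuracy={acc:.3f} precision={prec:.3f}")
```

The synthetic gap in this sketch is deliberately wide, so the classifier separates the two cases almost perfectly. Real measurements are far noisier, which is consistent with the abstract reporting 81% accuracy and 0.89 precision for membership inference rather than a perfect score.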