2024-12-09 University of Michigan

<Related information>

- https://news.umich.edu/not-so-simple-machines-cracking-the-code-for-materials-that-can-learn/

- https://www.nature.com/articles/s41467-024-54849-z

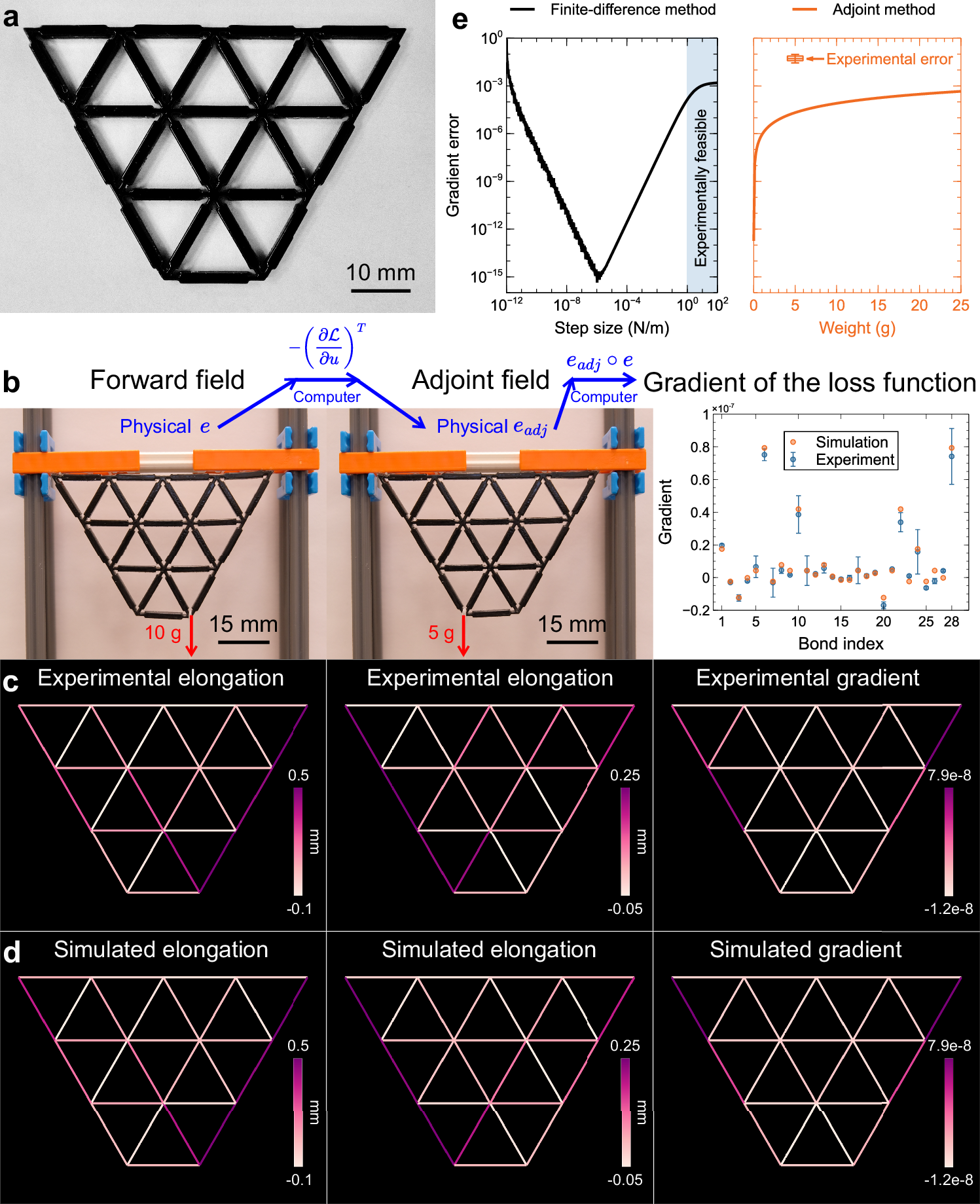

Training all-mechanical neural networks for task learning through in situ backpropagation

Shuaifeng Li & Xiaoming Mao

Nature Communications, Published: 09 December 2024

DOI: https://doi.org/10.1038/s41467-024-54849-z

Abstract

Recent advances unveiled physical neural networks as promising machine learning platforms, offering faster and more energy-efficient information processing. Compared with extensively-studied optical neural networks, the development of mechanical neural networks remains nascent and faces significant challenges, including heavy computational demands and learning with approximate gradients. Here, we introduce the mechanical analogue of in situ backpropagation to enable highly efficient training of mechanical neural networks. We theoretically prove that the exact gradient can be obtained locally, enabling learning through the immediate vicinity, and we experimentally demonstrate this backpropagation to obtain gradient with high precision. With the gradient information, we showcase the successful training of networks in simulations for behavior learning and machine learning tasks, achieving high accuracy in experiments of regression and classification. Furthermore, we present the retrainability of networks involving task-switching and damage, demonstrating the resilience. Our findings, which integrate the theory for training mechanical neural networks and experimental and numerical validations, pave the way for mechanical machine learning hardware and autonomous self-learning material systems.
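To make the abstract's claim that "the exact gradient can be obtained locally" more concrete, here is a minimal sketch using the standard adjoint method on a toy 1D chain of linear springs in static equilibrium. It is an illustration under stated assumptions, not the authors' implementation: the chain geometry, the squared-error loss, and all names (`incidence`, `solve`, `loss_and_grad`, `train`) are hypothetical. In this toy model, the gradient of the loss with respect to each spring constant is minus the product of that spring's elongation in the forward solve and its elongation in the adjoint solve, so each spring needs only its own two elongations.

```python
import numpy as np

def incidence(n):
    """Elongation operator B for a chain: wall - n free nodes - wall (n + 1 springs)."""
    B = np.zeros((n + 1, n))
    for j in range(n + 1):
        if j < n:
            B[j, j] = 1.0        # spring j ends at node j
        if j >= 1:
            B[j, j - 1] = -1.0   # spring j starts at node j - 1
    return B

def solve(k, B, load):
    """Static equilibrium K u = load, with stiffness matrix K = B^T diag(k) B."""
    K = B.T @ (k[:, None] * B)
    return np.linalg.solve(K, load)

def loss_and_grad(k, B, f, out, target):
    """Squared-error loss on one output node and its gradient w.r.t. spring constants."""
    u = solve(k, B, f)               # forward field: response to the input force
    err = u[out] - target
    g = np.zeros_like(u)
    g[out] = err                     # dL/du, applied as the adjoint "force"
    lam = solve(k, B, g)             # adjoint field (K is symmetric)
    grad = -(B @ lam) * (B @ u)      # per-spring product of the two elongations
    return 0.5 * err**2, grad

def train(k, B, f, out, target, lr=0.5, steps=200):
    """Plain gradient descent; spring constants are clipped to stay positive."""
    for _ in range(steps):
        _, grad = loss_and_grad(k, B, f, out, target)
        k = np.clip(k - lr * grad, 0.1, None)
    return k

# Toy task (assumed values): push node 0 with unit force, ask node 3 to move by 0.3.
B = incidence(4)
f = np.array([1.0, 0.0, 0.0, 0.0])
k0 = np.ones(5)
k_trained = train(k0, B, f, out=3, target=0.3)
```

The sketch uses two linear solves per step regardless of how many springs there are, which is the usual appeal of adjoint-style gradients; in a physical network the two solves would correspond to two measured deformation fields rather than calls to `np.linalg.solve`.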