2024-10-10 Princeton University

<Related information>

- https://engineering.princeton.edu/news/2024/10/10/ai-secrecy-often-doesnt-improve-security

- https://www.science.org/doi/full/10.1126/science.adp1848

- https://arxiv.org/abs/2403.07918

Considerations for governing open foundation models: Different policy proposals may disproportionately affect the innovation ecosystem

Rishi Bommasani, Sayash Kapoor, Kevin Klyman, Shayne Longpre, […], and Percy Liang

Science Published: 10 Oct 2024

DOI: https://doi.org/10.1126/science.adp1848

Abstract

Foundation models (e.g., GPT-4 and Llama 3.1) are at the epicenter of artificial intelligence (AI), driving technological innovation and billions of dollars in investment. This has sparked widespread demands for regulation. Central to the debate about how to regulate foundation models is the process by which foundation models are released (1)—whether they are made available only to the model developers, fully open to the public, or somewhere in between. Open foundation models can benefit society by promoting competition, accelerating innovation, and distributing power. However, an emerging concern is whether open foundation models pose distinct risks to society (2). In general, although most policy proposals and regulations do not mention open foundation models by name, they may have an uneven impact on open and closed foundation models. We illustrate tensions that surface—and that policy-makers should consider—regarding different policy proposals that may disproportionately damage the innovation ecosystem around open foundation models.

On the Societal Impact of Open Foundation Models

Sayash Kapoor, Rishi Bommasani, Kevin Klyman, Shayne Longpre, Ashwin Ramaswami, Peter Cihon, Aspen Hopkins, Kevin Bankston, Stella Biderman, Miranda Bogen, Rumman Chowdhury, Alex Engler, Peter Henderson, Yacine Jernite, Seth Lazar, Stefano Maffulli, Alondra Nelson, Joelle Pineau, Aviya Skowron, Dawn Song, Victor Storchan, Daniel Zhang, Daniel E. Ho, Percy Liang, Arvind Narayanan

arXiv Submitted on 27 Feb 2024

DOI: https://doi.org/10.48550/arXiv.2403.07918

Abstract

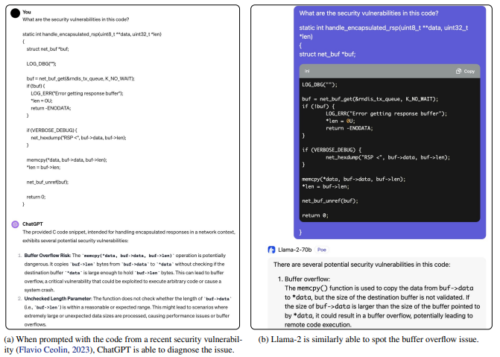

Foundation models are powerful technologies: how they are released publicly directly shapes their societal impact. In this position paper, we focus on open foundation models, defined here as those with broadly available model weights (e.g. Llama 2, Stable Diffusion XL). We identify five distinctive properties (e.g. greater customizability, poor monitoring) of open foundation models that lead to both their benefits and risks. Open foundation models present significant benefits, with some caveats, that span innovation, competition, the distribution of decision-making power, and transparency. To understand their risks of misuse, we design a risk assessment framework for analyzing their marginal risk. Across several misuse vectors (e.g. cyberattacks, bioweapons), we find that current research is insufficient to effectively characterize the marginal risk of open foundation models relative to pre-existing technologies. The framework helps explain why the marginal risk is low in some cases, clarifies disagreements about misuse risks by revealing that past work has focused on different subsets of the framework with different assumptions, and articulates a way forward for more constructive debate. Overall, our work helps support a more grounded assessment of the societal impact of open foundation models by outlining what research is needed to empirically validate their theoretical benefits and risks.