2024-11-15 Columbia University

<Related information>

- https://www.engineering.columbia.edu/about/news/advancing-robotics-power-touch

- https://arxiv.org/abs/2410.24091

- https://www.nature.com/articles/s41586-019-1234-z

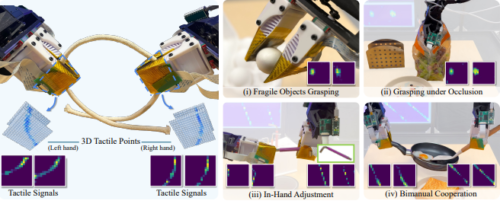

3D-ViTac: Learning Fine-Grained Manipulation with Visuo-Tactile Sensing

Binghao Huang, Yixuan Wang, Xinyi Yang, Yiyue Luo, Yunzhu Li

arXiv, submitted on 31 Oct 2024

DOI:https://doi.org/10.48550/arXiv.2410.24091

Abstract

Tactile and visual perception are both crucial for humans to perform fine-grained interactions with their environment. Developing similar multi-modal sensing capabilities for robots can significantly enhance and expand their manipulation skills. This paper introduces 3D-ViTac, a multi-modal sensing and learning system designed for dexterous bimanual manipulation. Our system features tactile sensors equipped with dense sensing units, each covering an area of 3 mm². These sensors are low-cost and flexible, providing detailed and extensive coverage of physical contacts, effectively complementing visual information. To integrate tactile and visual data, we fuse them into a unified 3D representation space that preserves their 3D structures and spatial relationships. The multi-modal representation can then be coupled with diffusion policies for imitation learning. Through concrete hardware experiments, we demonstrate that even low-cost robots can perform precise manipulations and significantly outperform vision-only policies, particularly in safely interacting with fragile items and executing long-horizon tasks involving in-hand manipulation. Our project page is available at this https URL.
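
The fusion step described in the abstract, placing tactile and visual data in one 3D space, can be illustrated with a minimal sketch. This is not the authors' implementation: the `Pose` helper, the `tactile_points` and `fuse` functions, the `pitch_m` spacing parameter, and the five-value point layout are all assumptions made for illustration. The sketch also assumes the pose of each sensor pad is already known, for example from the robot's kinematics.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
# Fused point layout (an assumption): x, y, z, pressure, is_tactile flag.
FusedPoint = Tuple[float, float, float, float, float]


@dataclass
class Pose:
    """Hypothetical rigid transform: row-major 3x3 rotation plus translation."""
    rotation: Tuple[Point, Point, Point]
    translation: Point

    def apply(self, p: Point) -> Point:
        r, t = self.rotation, self.translation
        return tuple(
            r[i][0] * p[0] + r[i][1] * p[1] + r[i][2] * p[2] + t[i]
            for i in range(3)
        )


def tactile_points(
    readings: Sequence[Sequence[float]], pitch_m: float, pad_pose: Pose
) -> List[FusedPoint]:
    """Place every sensing unit of a rows x cols pad into the shared 3D frame."""
    points = []
    for row, line in enumerate(readings):
        for col, pressure in enumerate(line):
            # Position of the sensing unit on the flat pad, in the pad's frame.
            local = (col * pitch_m, row * pitch_m, 0.0)
            x, y, z = pad_pose.apply(local)
            points.append((x, y, z, pressure, 1.0))
    return points


def fuse(
    camera_points: Sequence[Point], tactile: Sequence[FusedPoint]
) -> List[FusedPoint]:
    """Concatenate camera and tactile points into one point set."""
    visual = [(x, y, z, 0.0, 0.0) for (x, y, z) in camera_points]
    return visual + list(tactile)


if __name__ == "__main__":
    identity = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
    pad = Pose(rotation=identity, translation=(0.2, 0.0, 0.1))
    cloud = fuse([(0.0, 0.0, 0.5)], tactile_points([[0.0, 0.7]], 0.003, pad))
    for point in cloud:
        print(point)
```

Because both modalities end up as points in the same frame, a downstream policy can reason about where a contact occurs relative to what the camera sees, which is the property the abstract attributes to the unified representation.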

Learning the signatures of the human grasp using a scalable tactile glove

Subramanian Sundaram, Petr Kellnhofer, Yunzhu Li, Jun-Yan Zhu, Antonio Torralba & Wojciech Matusik

Nature, published 29 May 2019

DOI:https://doi.org/10.1038/s41586-019-1234-z

Abstract

Humans can feel, weigh and grasp diverse objects, and simultaneously infer their material properties while applying the right amount of force, a challenging set of tasks for a modern robot [1]. Mechanoreceptor networks that provide sensory feedback and enable the dexterity of the human grasp [2] remain difficult to replicate in robots. Whereas computer-vision-based robot grasping strategies [3,4,5] have progressed substantially with the abundance of visual data and emerging machine-learning tools, there are as yet no equivalent sensing platforms and large-scale datasets with which to probe the use of the tactile information that humans rely on when grasping objects. Studying the mechanics of how humans grasp objects will complement vision-based robotic object handling. Importantly, the inability to record and analyse tactile signals currently limits our understanding of the role of tactile information in the human grasp itself; for example, how tactile maps are used to identify objects and infer their properties is unknown [6]. Here we use a scalable tactile glove and deep convolutional neural networks to show that sensors uniformly distributed over the hand can be used to identify individual objects, estimate their weight and explore the typical tactile patterns that emerge while grasping objects. The sensor array (548 sensors) is assembled on a knitted glove, and consists of a piezoresistive film connected by a network of conductive thread electrodes that are passively probed. Using a low-cost (about US$10) scalable tactile glove sensor array, we record a large-scale tactile dataset with 135,000 frames, each covering the full hand, while interacting with 26 different objects. This set of interactions with different objects reveals the key correspondences between different regions of a human hand while it is manipulating objects. Insights from the tactile signatures of the human grasp, seen through the lens of an artificial analogue of the natural mechanoreceptor network, can thus aid the future design of prosthetics [7], robot grasping tools and human–robot interactions [1,8,9,10].
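
As a rough illustration of how a grid of electrodes can yield one tactile frame, the sketch below scans every row and column crossing of a sensor matrix and normalizes the result against a no-contact baseline. It is a generic row-column scan, not the glove's actual readout: the abstract does not describe the electronics, and `scan_frame`, `normalize`, `read_crosspoint`, and the simulated values are hypothetical names and data introduced here.

```python
from typing import Callable, List

Frame = List[List[float]]


def scan_frame(
    read_crosspoint: Callable[[int, int], float], n_rows: int, n_cols: int
) -> Frame:
    """Read every row/column crossing once and return the values as a 2D frame."""
    return [
        [read_crosspoint(row, col) for col in range(n_cols)]
        for row in range(n_rows)
    ]


def normalize(frame: Frame, baseline: Frame) -> Frame:
    """Subtract the no-contact baseline, clip at zero, and scale to [0, 1]."""
    delta = [
        [max(value - rest, 0.0) for value, rest in zip(frame_row, base_row)]
        for frame_row, base_row in zip(frame, baseline)
    ]
    peak = max(max(row) for row in delta)
    if peak == 0.0:
        return delta  # no contact anywhere; avoid dividing by zero
    return [[value / peak for value in row] for row in delta]


if __name__ == "__main__":
    # Simulated readings (an assumption): a single pressed crossing at (1, 2).
    def fake_read(row: int, col: int) -> float:
        return 5.0 if (row, col) == (1, 2) else 1.0

    rest = [[1.0] * 3 for _ in range(2)]
    print(normalize(scan_frame(fake_read, 2, 3), rest))
```

Frames of this kind, one per time step, are the sort of 2D input a convolutional network can consume, which matches the abstract's description of full-hand frames fed to deep convolutional neural networks.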